使用 PyMC 将强化学习模型拟合到行为数据#

强化学习模型常用于行为研究,以模拟动物和人类在重复选择并获得某种形式反馈(例如奖励或惩罚)的情况下如何学习。

在本笔记本中,我们将考虑最简单的学习场景,其中只有两种可能的动作。当采取一个动作时,总是会立即获得奖励。最后,每个动作的结果与之前采取的动作无关。这种情况有时被称为多臂老虎机问题。

假设两个动作(例如,左按钮和右按钮)分别在 40% 和 60% 的时间内与单位奖励相关联。一开始,学习代理不知道哪个动作 \(a\) 更好,因此他们可能会假设两个动作的平均值均为 50%。我们可以将这些值存储在一个表中,该表通常被称为 \(Q\) 表

当选择一个动作并观察到奖励 \(r = \{0,1\}\) 时,该动作的估计值将按如下方式更新

其中 \(\alpha \in [0, 1]\) 是一个学习参数,它影响每个试验中动作的值向观察到的奖励偏移多少。最后,\(Q\) 表值通过 softmax 转换转换为动作概率

其中 \(\beta \in (0, +\infty)\) 参数决定了代理选择中的噪声水平。较大的值将与更确定的选择相关联,而较小的值将与越来越随机的选择相关联。

import arviz as az

import matplotlib.pyplot as plt

import numpy as np

import pymc as pm

import pytensor

import pytensor.tensor as pt

import scipy

from matplotlib.lines import Line2D

seed = sum(map(ord, "RL_PyMC"))

rng = np.random.default_rng(seed)

az.style.use("arviz-darkgrid")

%config InlineBackend.figure_format = "retina"

生成虚假数据#

def generate_data(rng, alpha, beta, n=100, p_r=None):

if p_r is None:

p_r = [0.4, 0.6]

actions = np.zeros(n, dtype="int")

rewards = np.zeros(n, dtype="int")

Qs = np.zeros((n, 2))

# Initialize Q table

Q = np.array([0.5, 0.5])

for i in range(n):

# Apply the Softmax transformation

exp_Q = np.exp(beta * Q)

prob_a = exp_Q / np.sum(exp_Q)

# Simulate choice and reward

a = rng.choice([0, 1], p=prob_a)

r = rng.random() < p_r[a]

# Update Q table

Q[a] = Q[a] + alpha * (r - Q[a])

# Store values

actions[i] = a

rewards[i] = r

Qs[i] = Q.copy()

return actions, rewards, Qs

true_alpha = 0.5

true_beta = 5

n = 150

actions, rewards, Qs = generate_data(rng, true_alpha, true_beta, n)

显示代码单元格源

_, ax = plt.subplots(figsize=(12, 5))

x = np.arange(len(actions))

ax.plot(x, Qs[:, 0] - 0.5 + 0, c="C0", lw=3, alpha=0.3)

ax.plot(x, Qs[:, 1] - 0.5 + 1, c="C1", lw=3, alpha=0.3)

s = 7

lw = 2

cond = (actions == 0) & (rewards == 0)

ax.plot(x[cond], actions[cond], "o", ms=s, mfc="None", mec="C0", mew=lw)

cond = (actions == 0) & (rewards == 1)

ax.plot(x[cond], actions[cond], "o", ms=s, mfc="C0", mec="C0", mew=lw)

cond = (actions == 1) & (rewards == 0)

ax.plot(x[cond], actions[cond], "o", ms=s, mfc="None", mec="C1", mew=lw)

cond = (actions == 1) & (rewards == 1)

ax.plot(x[cond], actions[cond], "o", ms=s, mfc="C1", mec="C1", mew=lw)

ax.set_yticks([0, 1], ["left", "right"])

ax.set_ylim(-1, 2)

ax.set_ylabel("action")

ax.set_xlabel("trial")

reward_artist = Line2D([], [], c="k", ls="none", marker="o", ms=s, mew=lw, label="Reward")

no_reward_artist = Line2D(

[], [], ls="none", marker="o", mfc="w", mec="k", ms=s, mew=lw, label="No reward"

)

Qvalue_artist = Line2D([], [], c="k", ls="-", lw=3, alpha=0.3, label="Qvalue (centered)")

ax.legend(handles=[no_reward_artist, Qvalue_artist, reward_artist], fontsize=12, loc=(1.01, 0.27));

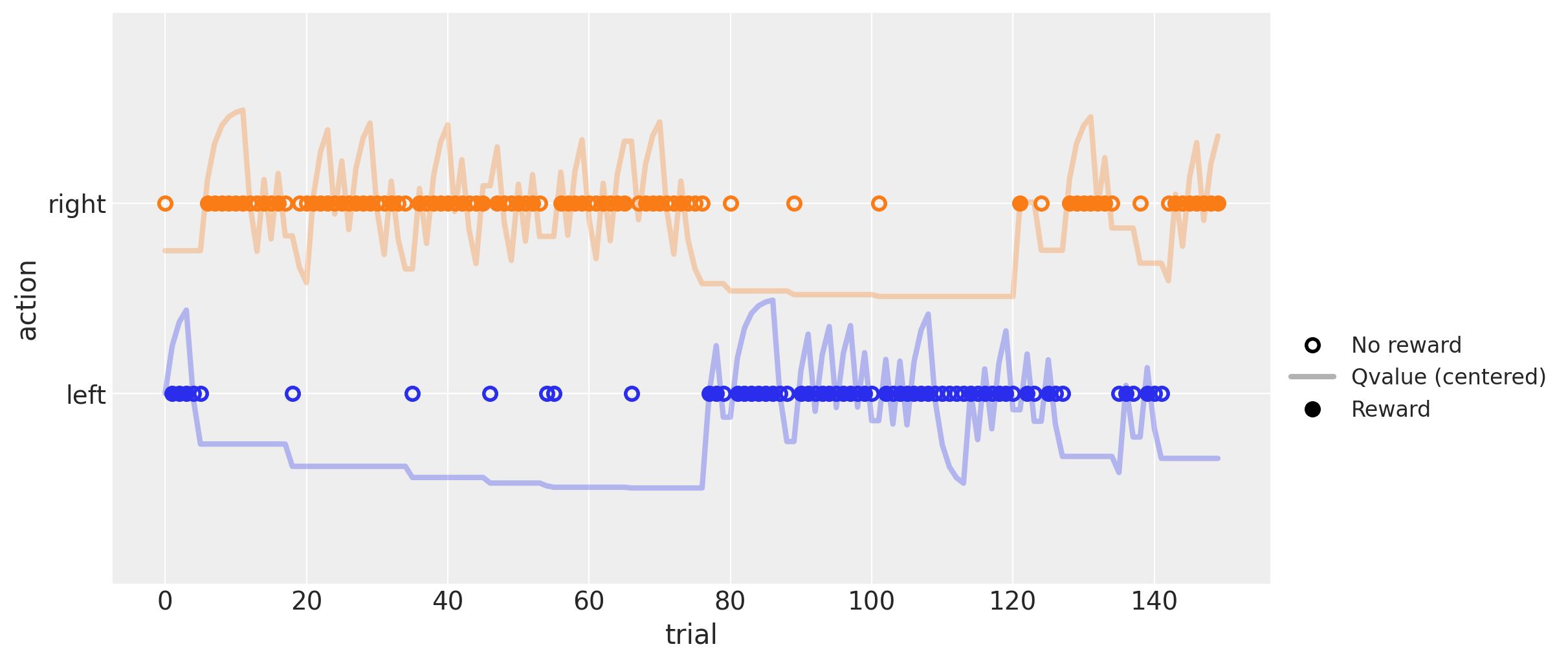

上面的图显示了 150 次试验的模拟运行,参数为 \(\alpha = .5\) 和 \(\beta = 5\),左(蓝色)和右(橙色)动作的恒定奖励概率分别为 \(.4\) 和 \(.6\)。

实心点和空心点分别表示后跟奖励和无奖励的动作。实线显示每个动作的估计 \(Q\) 值,该值以各自颜色的点为中心(当各自的 \(Q\) 值高于 \(.5\) 时,该线在其点上方,否则在其下方)。可以看出,该值随着奖励(实心点)而增加,随着无奖励(空心点)而减少。

每次结果后线条高度的变化与 \(\alpha\) 参数直接相关。\(\beta\) 参数的影响更难以掌握,但一种思考方式是,其值越高,代理将越坚持具有最高估计值的动作,即使两者之间的差异非常小。相反,当此值接近于零时,代理将开始在两个动作之间随机选择,而不管它们的估计值如何。

通过最大似然估计学习参数#

生成数据后,目标是现在“反转模型”以估计学习参数 \(\alpha\) 和 \(\beta\)。我首先通过最大似然估计 (MLE) 来完成它。这需要编写一个自定义函数,该函数计算给定潜在 \(\alpha\) 和 \(\beta\) 以及固定的观察到的动作和奖励的数据的可能性(实际上,该函数计算负对数似然,以避免下溢问题)。

我使用方便的 scipy.optimize.minimize 函数,以快速检索最大化数据似然性的 \(\alpha\) 和 \(\beta\) 值(或者实际上,最小化负对数似然性)。

当我稍后编写在 PyMC 中计算选择概率的 PyTensor 函数时,这也很有帮助。首先,底层逻辑是相同的,唯一改变的是语法。其次,它提供了一种确信我没有搞砸的方法,并且我实际计算的是我想要计算的。

def llik_td(x, *args):

# Extract the arguments as they are passed by scipy.optimize.minimize

alpha, beta = x

actions, rewards = args

# Initialize values

Q = np.array([0.5, 0.5])

logp_actions = np.zeros(len(actions))

for t, (a, r) in enumerate(zip(actions, rewards)):

# Apply the softmax transformation

Q_ = Q * beta

logp_action = Q_ - scipy.special.logsumexp(Q_)

# Store the log probability of the observed action

logp_actions[t] = logp_action[a]

# Update the Q values for the next trial

Q[a] = Q[a] + alpha * (r - Q[a])

# Return the negative log likelihood of all observed actions

return -np.sum(logp_actions[1:])

函数 llik_td 与 generate_data 函数非常相似,除了它在每次试验中存储观察到的动作的对数概率,而不是模拟动作和奖励。

函数 scipy.special.logsumexp 用于以数值更稳定的方式计算项 \(\log(\exp(\beta Q_{\text{right}}) + \exp(\beta Q_{\text{left}}))\)。

最后,该函数返回所有对数概率的负和,这等效于在其原始尺度上乘以概率。

(忽略第一个动作只是为了使输出与稍后的 PyTensor 函数具有可比性。它实际上不会改变任何估计,因为初始概率是固定的,并且不依赖于 \(\alpha\) 或 \(\beta\) 参数。)

llik_td([true_alpha, true_beta], *(actions, rewards))

47.418936097207016

上面,我计算了给定真实 \(\alpha\) 和 \(\beta\) 参数的数据的负对数似然性。

下面,我让 scipy 找到这两个参数的 MLE 值

x0 = [true_alpha, true_beta]

result = scipy.optimize.minimize(llik_td, x0, args=(actions, rewards), method="BFGS")

print(result)

print("")

print(f"MLE: alpha = {result.x[0]:.2f} (true value = {true_alpha})")

print(f"MLE: beta = {result.x[1]:.2f} (true value = {true_beta})")

fun: 47.050814541102895

hess_inv: array([[ 0.00733307, -0.02421781],

[-0.02421781, 0.86969299]])

jac: array([0., 0.])

message: 'Optimization terminated successfully.'

nfev: 30

nit: 7

njev: 10

status: 0

success: True

x: array([0.50473117, 5.7073849 ])

MLE: alpha = 0.50 (true value = 0.5)

MLE: beta = 5.71 (true value = 5)

估计的 MLE 值与真实值相对接近。但是,此过程没有给出关于这些参数值周围合理不确定性的任何概念。为了获得它,我将转向 PyMC 进行贝叶斯后验估计。

但在那之前,我将对对数似然函数实现一个简单的向量化优化,它将更类似于 PyTensor 对应函数。这样做的原因是加快缓慢的贝叶斯推理引擎的速度。

def llik_td_vectorized(x, *args):

# Extract the arguments as they are passed by scipy.optimize.minimize

alpha, beta = x

actions, rewards = args

# Create a list with the Q values of each trial

Qs = np.ones((n, 2), dtype="float64")

Qs[0] = 0.5

for t, (a, r) in enumerate(

zip(actions[:-1], rewards[:-1])

): # The last Q values were never used, so there is no need to compute them

Qs[t + 1, a] = Qs[t, a] + alpha * (r - Qs[t, a])

Qs[t + 1, 1 - a] = Qs[t, 1 - a]

# Apply the softmax transformation in a vectorized way

Qs_ = Qs * beta

logp_actions = Qs_ - scipy.special.logsumexp(Qs_, axis=1)[:, None]

# Return the logp_actions for the observed actions

logp_actions = logp_actions[np.arange(len(actions)), actions]

return -np.sum(logp_actions[1:])

llik_td_vectorized([true_alpha, true_beta], *(actions, rewards))

47.418936097207016

%timeit llik_td([true_alpha, true_beta], *(actions, rewards))

7.13 ms ± 832 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

%timeit llik_td_vectorized([true_alpha, true_beta], *(actions, rewards))

653 µs ± 119 µs per loop (mean ± std. dev. of 7 runs, 1,000 loops each)

向量化函数给出相同的结果,但运行速度快了几乎一个数量级。

当作为 PyTensor 函数实现时,向量化版本和标准版本之间的差异没有那么剧烈。尽管如此,它的运行速度仍然快了两倍,这意味着模型的采样速度也快了两倍!

通过 PyMC 估计学习参数#

最具挑战性的部分是创建 PyTensor 函数/循环,以在使用 PyMC 对参数进行采样时估计 Q 值。

def update_Q(action, reward, Qs, alpha):

"""

This function updates the Q table according to the RL update rule.

It will be called by pytensor.scan to do so recursevely, given the observed data and the alpha parameter

This could have been replaced be the following lamba expression in the pytensor.scan fn argument:

fn=lamba action, reward, Qs, alpha: pt.set_subtensor(Qs[action], Qs[action] + alpha * (reward - Qs[action]))

"""

Qs = pt.set_subtensor(Qs[action], Qs[action] + alpha * (reward - Qs[action]))

return Qs

# Transform the variables into appropriate PyTensor objects

rewards_ = pt.as_tensor_variable(rewards, dtype="int32")

actions_ = pt.as_tensor_variable(actions, dtype="int32")

alpha = pt.scalar("alpha")

beta = pt.scalar("beta")

# Initialize the Q table

Qs = 0.5 * pt.ones((2,), dtype="float64")

# Compute the Q values for each trial

Qs, _ = pytensor.scan(

fn=update_Q, sequences=[actions_, rewards_], outputs_info=[Qs], non_sequences=[alpha]

)

# Apply the softmax transformation

Qs = Qs * beta

logp_actions = Qs - pt.logsumexp(Qs, axis=1, keepdims=True)

# Calculate the negative log likelihod of the observed actions

logp_actions = logp_actions[pt.arange(actions_.shape[0] - 1), actions_[1:]]

neg_loglike = -pt.sum(logp_actions)

让我们将其包装在一个函数中,以测试它是否按预期工作。

pytensor_llik_td = pytensor.function(

inputs=[alpha, beta], outputs=neg_loglike, on_unused_input="ignore"

)

result = pytensor_llik_td(true_alpha, true_beta)

float(result)

47.418936097206995

获得了相同的结果,因此我们可以确信 PyTensor 循环按预期工作。我们现在准备好实现 PyMC 模型。

def pytensor_llik_td(alpha, beta, actions, rewards):

rewards = pt.as_tensor_variable(rewards, dtype="int32")

actions = pt.as_tensor_variable(actions, dtype="int32")

# Compute the Qs values

Qs = 0.5 * pt.ones((2,), dtype="float64")

Qs, updates = pytensor.scan(

fn=update_Q, sequences=[actions, rewards], outputs_info=[Qs], non_sequences=[alpha]

)

# Apply the sotfmax transformation

Qs = Qs[:-1] * beta

logp_actions = Qs - pt.logsumexp(Qs, axis=1, keepdims=True)

# Calculate the log likelihood of the observed actions

logp_actions = logp_actions[pt.arange(actions.shape[0] - 1), actions[1:]]

return pt.sum(logp_actions) # PyMC expects the standard log-likelihood

with pm.Model() as m:

alpha = pm.Beta(name="alpha", alpha=1, beta=1)

beta = pm.HalfNormal(name="beta", sigma=10)

like = pm.Potential(name="like", var=pytensor_llik_td(alpha, beta, actions, rewards))

tr = pm.sample(random_seed=rng)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [alpha, beta]

Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 48 seconds.

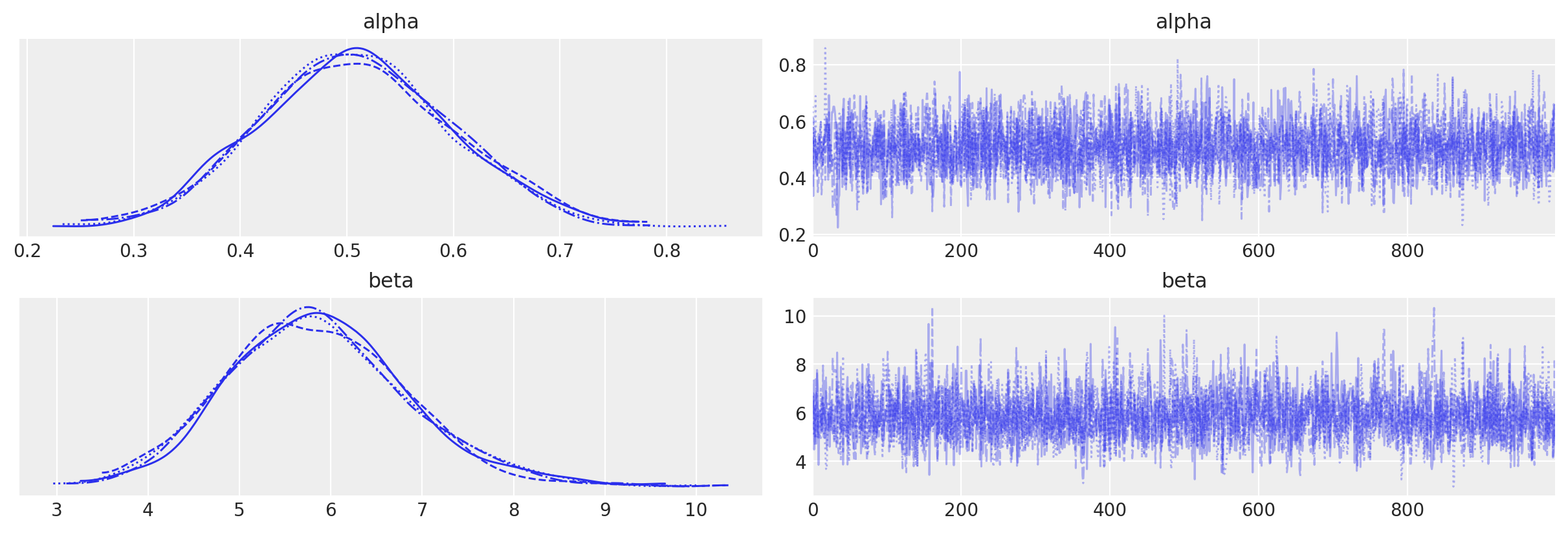

az.plot_trace(data=tr);

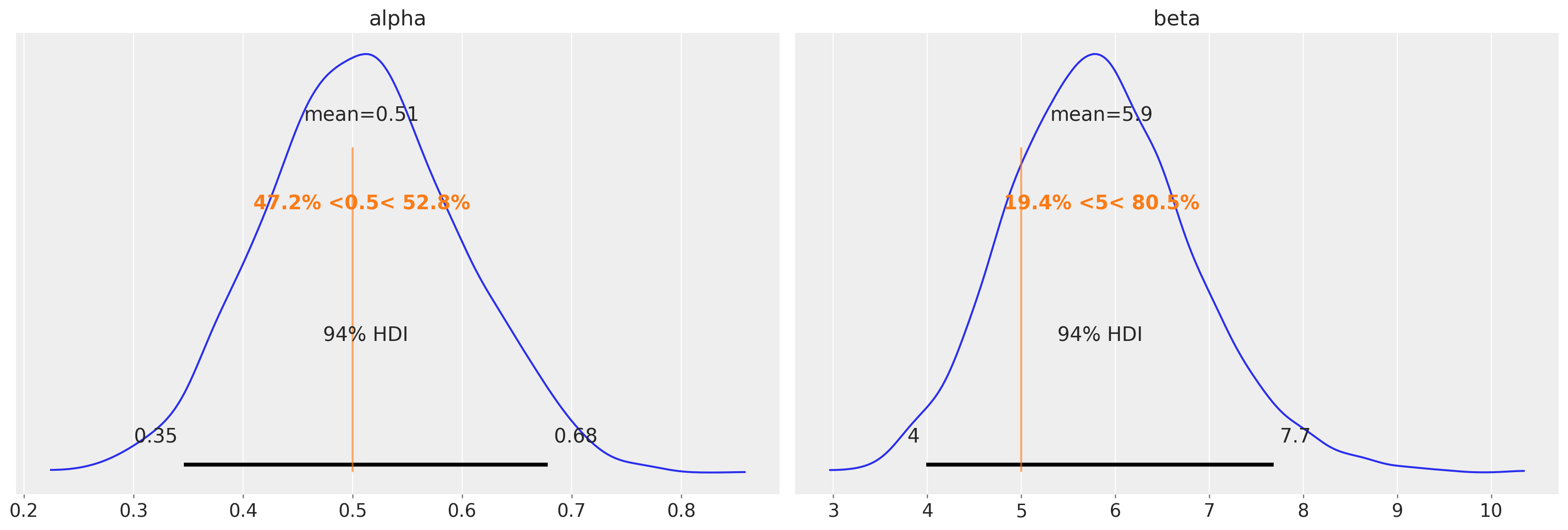

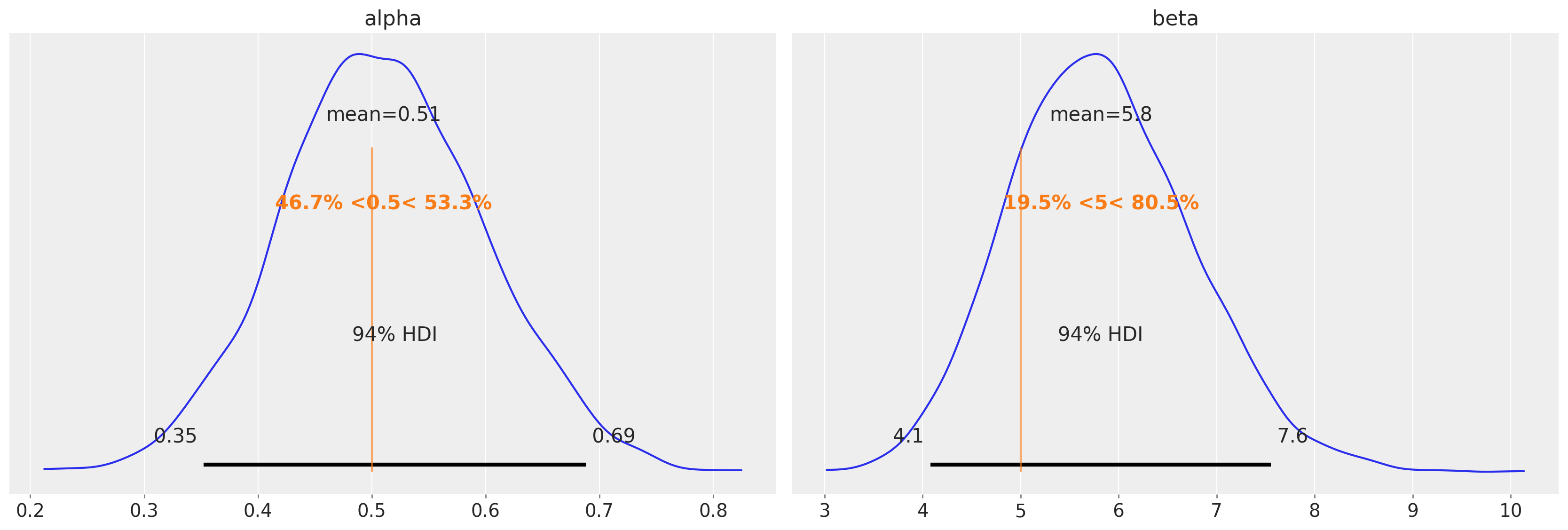

az.plot_posterior(data=tr, ref_val=[true_alpha, true_beta]);

在此示例中,获得的后验值很好地集中在 MLE 值附近。我们获得的是对这些值周围合理不确定性的了解。

使用伯努利分布作为似然函数的替代模型#

在最后一部分中,我提供了一个使用伯努利似然函数的模型的替代实现。

注意

使用伯努利似然函数的一个原因是,然后可以进行先验和后验预测抽样以及模型比较。使用 pm.Potential 你不能这样做,因为 PyMC 不知道什么是似然函数,什么是先验,也不知道如何生成随机抽取。当使用 pm.Bernoulli 似然函数时,这些都不是问题。

def right_action_probs(alpha, beta, actions, rewards):

rewards = pt.as_tensor_variable(rewards, dtype="int32")

actions = pt.as_tensor_variable(actions, dtype="int32")

# Compute the Qs values

Qs = 0.5 * pt.ones((2,), dtype="float64")

Qs, updates = pytensor.scan(

fn=update_Q, sequences=[actions, rewards], outputs_info=[Qs], non_sequences=[alpha]

)

# Apply the sotfmax transformation

Qs = Qs[:-1] * beta

logp_actions = Qs - pt.logsumexp(Qs, axis=1, keepdims=True)

# Return the probabilities for the right action, in the original scale

return pt.exp(logp_actions[:, 1])

with pm.Model() as m_alt:

alpha = pm.Beta(name="alpha", alpha=1, beta=1)

beta = pm.HalfNormal(name="beta", sigma=10)

action_probs = right_action_probs(alpha, beta, actions, rewards)

like = pm.Bernoulli(name="like", p=action_probs, observed=actions[1:])

tr_alt = pm.sample(random_seed=rng)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [alpha, beta]

Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 58 seconds.

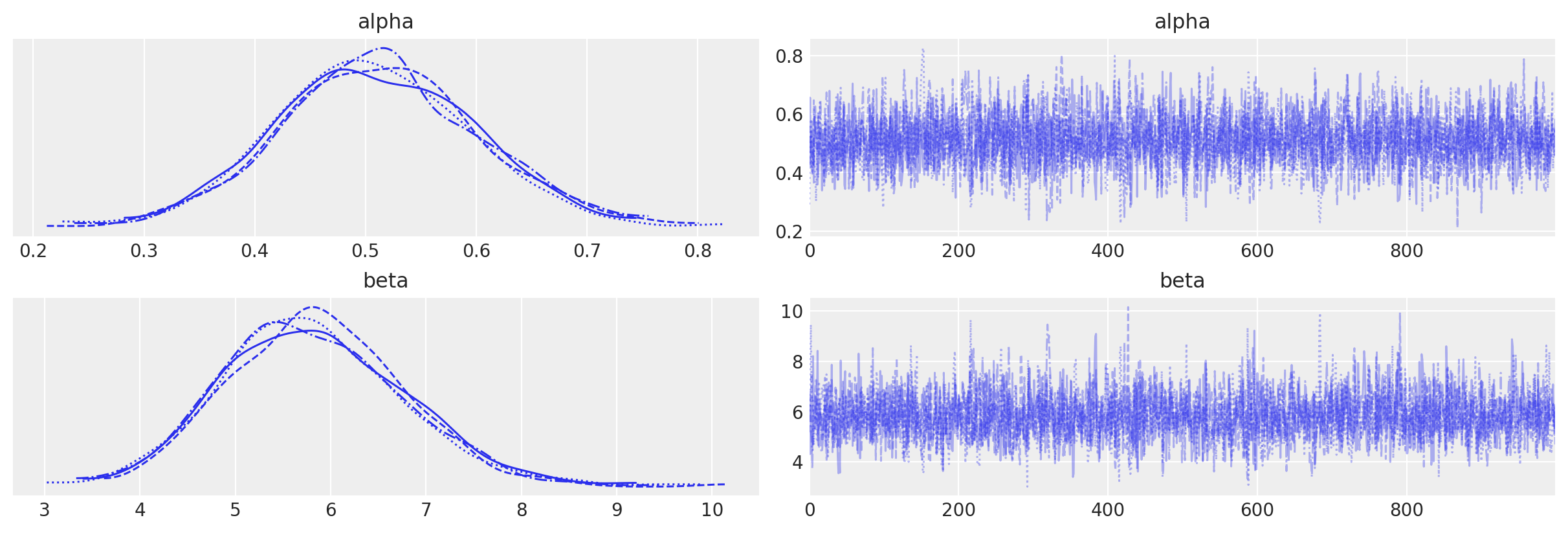

az.plot_trace(data=tr_alt);

az.plot_posterior(data=tr_alt, ref_val=[true_alpha, true_beta]);

水印#

%load_ext watermark

%watermark -n -u -v -iv -w -p aeppl,xarray

Last updated: Thu Aug 18 2022

Python implementation: CPython

Python version : 3.9.13

IPython version : 8.4.0

aeppl : 0.0.34

xarray: 2022.6.0

pytensor : 2.7.9

arviz : 0.12.1

pymc : 4.1.5

sys : 3.9.13 (main, May 24 2022, 21:28:31)

[Clang 13.1.6 (clang-1316.0.21.2)]

scipy : 1.8.1

numpy : 1.22.4

matplotlib: 3.5.3

Watermark: 2.3.1

参考文献#

Robert C Wilson 和 Anne GE Collins。《计算行为数据建模的十条简单规则》。eLife,8:e49547, nov 2019。 doi:10.7554/eLife.49547。

致谢#

由 Ricardo Vieira 于 2022 年 6 月创作

改编自 Maria Eckstein 的 PyMC 代码 (GitHub, PyMC Discourse)

改编自 Robert Wilson 和 Anne Collins [Wilson and Collins, 2019] 的 MLE 代码 (GitHub)

由 Juan Orduz 于 2022 年 8 月重新执行 (pymc-examples#410)

许可声明#

本示例库中的所有笔记本均根据 MIT 许可证提供,该许可证允许修改和再分发以用于任何用途,前提是保留版权和许可声明。

引用 PyMC 示例#

要引用此笔记本,请使用 Zenodo 为 pymc-examples 存储库提供的 DOI。

重要提示

许多笔记本都改编自其他来源:博客、书籍……在这种情况下,您也应该引用原始来源。

还请记住引用您的代码使用的相关库。

这是一个 bibtex 中的引用模板

@incollection{citekey,

author = "<notebook authors, see above>",

title = "<notebook title>",

editor = "PyMC Team",

booktitle = "PyMC examples",

doi = "10.5281/zenodo.5654871"

}

渲染后可能看起来像