贝叶斯向量自回归模型#

import os

import arviz as az

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import pymc as pm

import statsmodels.api as sm

from pymc.sampling_jax import sample_blackjax_nuts

/Users/nathanielforde/mambaforge/envs/myjlabenv/lib/python3.11/site-packages/pymc/sampling/jax.py:39: UserWarning: This module is experimental.

warnings.warn("This module is experimental.")

RANDOM_SEED = 8927

rng = np.random.default_rng(RANDOM_SEED)

az.style.use("arviz-darkgrid")

%config InlineBackend.figure_format = 'retina'

V(ector)A(uto)R(egression) 模型#

在本笔记本中,我们将概述贝叶斯向量自回归模型的应用。我们将借鉴 PYMC Labs 博客文章 中的工作(参见 Vieira [无日期])。这将是一个分为三个部分系列。在第一部分中,我们想展示如何在 PYMC 中拟合贝叶斯 VAR 模型。在第二部分中,我们将展示如何从拟合模型中提取额外的见解,通过脉冲响应分析,并从拟合的 VAR 模型中进行预测。在第三部分也是最后一部分中,我们将更详细地展示使用贝叶斯 VAR 模型的分层先验的好处。具体来说,我们将概述如何以及为什么实际上存在一系列精心制定的行业标准先验,这些先验适用于贝叶斯 VAR 建模。

在这篇文章中,我们将 (i) 在虚假数据上演示简单 VAR 模型的基本模式,并展示模型如何恢复真实数据生成参数,以及 (ii) 我们将展示一个应用于宏观经济数据的示例,并将结果与使用 statsmodels MLE 拟合在相同数据上实现的结果进行比较,以及 (iii) 展示一个估计多个国家的层次贝叶斯 VAR 模型的示例。

通用自回归模型#

简单自回归模型的思想是捕捉时间序列的过去观测值如何预测当前观测值的方式。因此,按照传统方式,如果我们将此建模为线性现象,我们将得到简单的自回归模型,其中当前值由过去值的加权线性组合和误差项预测。

对于预测当前观测值所需的滞后阶数。

VAR 模型是这种框架的一种泛化,因为它保留了线性组合方法,但允许我们一次对多个时间序列进行建模。因此,具体而言,这意味着 \(\mathbf{y}_{t}\) 是一个向量,其中

其中 As 是系数矩阵,用于与每个单独时间序列的过去值组合。例如,考虑一个经济学示例,其中我们旨在对每个变量对自身和他人的关系和相互影响进行建模。

这种结构是使用矩阵符号的紧凑表示。当我们拟合 VAR 模型时,我们试图估计的是确定最适合我们时间序列数据的线性组合性质的 A 矩阵。这种时间序列模型可以具有自回归或移动平均表示,并且细节对于 VAR 模型拟合的一些含义很重要。

我们将在本系列的下一个笔记本中看到,VAR 的移动平均表示如何使其自身适用于将模型中的协方差结构解释为表示组件时间序列之间的一种脉冲响应关系。

具有两个滞后项的具体规范#

矩阵符号对于建议模型的广泛模式很方便,但查看简单情况下的代数很有用。考虑将爱尔兰的 GDP 和消费描述为

通过这种方式,我们可以看到,如果我们能够估计 \(\beta\) 项,我们就得到了每个变量对另一个变量的双向影响的估计。这是建模的一个有用特征。在接下来的内容中,我应该强调我不是经济学家,我的目标只是展示这些模型的功能,而不是给你关于决定爱尔兰 GDP 数字的经济关系的决定性意见。

创建一些虚假数据#

def simulate_var(

intercepts, coefs_yy, coefs_xy, coefs_xx, coefs_yx, noises=(1, 1), *, warmup=100, steps=200

):

draws_y = np.zeros(warmup + steps)

draws_x = np.zeros(warmup + steps)

draws_y[:2] = intercepts[0]

draws_x[:2] = intercepts[1]

for step in range(2, warmup + steps):

draws_y[step] = (

intercepts[0]

+ coefs_yy[0] * draws_y[step - 1]

+ coefs_yy[1] * draws_y[step - 2]

+ coefs_xy[0] * draws_x[step - 1]

+ coefs_xy[1] * draws_x[step - 2]

+ rng.normal(0, noises[0])

)

draws_x[step] = (

intercepts[1]

+ coefs_xx[0] * draws_x[step - 1]

+ coefs_xx[1] * draws_x[step - 2]

+ coefs_yx[0] * draws_y[step - 1]

+ coefs_yx[1] * draws_y[step - 2]

+ rng.normal(0, noises[1])

)

return draws_y[warmup:], draws_x[warmup:]

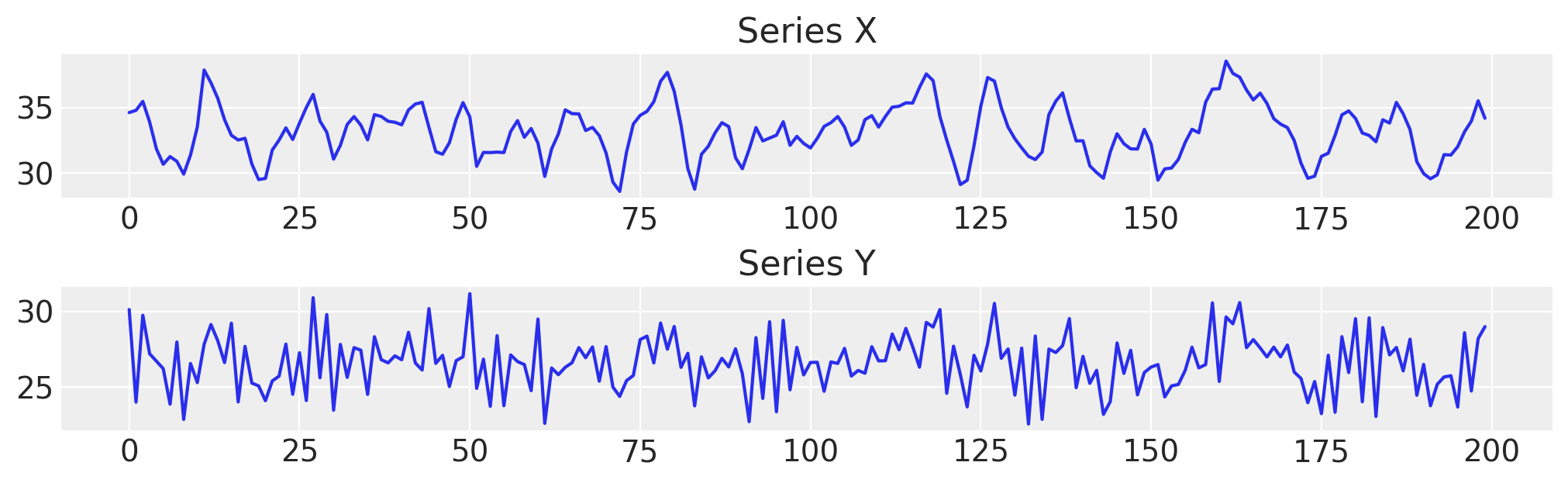

首先,我们生成一些具有已知参数的虚假数据。

var_y, var_x = simulate_var(

intercepts=(18, 8),

coefs_yy=(-0.8, 0),

coefs_xy=(0.9, 0),

coefs_xx=(1.3, -0.7),

coefs_yx=(-0.1, 0.3),

)

df = pd.DataFrame({"x": var_x, "y": var_y})

df.head()

| x | y | |

|---|---|---|

| 0 | 34.606613 | 30.117581 |

| 1 | 34.773803 | 23.996700 |

| 2 | 35.455237 | 29.738941 |

| 3 | 33.886706 | 27.193417 |

| 4 | 31.837465 | 26.704728 |

fig, axs = plt.subplots(2, 1, figsize=(10, 3))

axs[0].plot(df["x"], label="x")

axs[0].set_title("Series X")

axs[1].plot(df["y"], label="y")

axs[1].set_title("Series Y");

处理多个滞后和不同维度#

当对多个时间序列进行建模并考虑可能需要在模型中包含的任意数量的滞后时,我们需要将一些模型定义抽象到辅助函数中。一个例子将使这一点更清楚一些。

### Define a helper function that will construct our autoregressive step for the marginal contribution of each lagged

### term in each of the respective time series equations

def calc_ar_step(lag_coefs, n_eqs, n_lags, df):

ars = []

for j in range(n_eqs):

ar = pm.math.sum(

[

pm.math.sum(lag_coefs[j, i] * df.values[n_lags - (i + 1) : -(i + 1)], axis=-1)

for i in range(n_lags)

],

axis=0,

)

ars.append(ar)

beta = pm.math.stack(ars, axis=-1)

return beta

### Make the model in such a way that it can handle different specifications of the likelihood term

### and can be run for simple prior predictive checks. This latter functionality is important for debugging of

### shape handling issues. Building a VAR model involves quite a few moving parts and it is handy to

### inspect the shape implied in the prior predictive checks.

def make_model(n_lags, n_eqs, df, priors, mv_norm=True, prior_checks=True):

coords = {

"lags": np.arange(n_lags) + 1,

"equations": df.columns.tolist(),

"cross_vars": df.columns.tolist(),

"time": [x for x in df.index[n_lags:]],

}

with pm.Model(coords=coords) as model:

lag_coefs = pm.Normal(

"lag_coefs",

mu=priors["lag_coefs"]["mu"],

sigma=priors["lag_coefs"]["sigma"],

dims=["equations", "lags", "cross_vars"],

)

alpha = pm.Normal(

"alpha", mu=priors["alpha"]["mu"], sigma=priors["alpha"]["sigma"], dims=("equations",)

)

data_obs = pm.Data("data_obs", df.values[n_lags:], dims=["time", "equations"], mutable=True)

betaX = calc_ar_step(lag_coefs, n_eqs, n_lags, df)

betaX = pm.Deterministic(

"betaX",

betaX,

dims=[

"time",

],

)

mean = alpha + betaX

if mv_norm:

n = df.shape[1]

## Under the hood the LKJ prior will retain the correlation matrix too.

noise_chol, _, _ = pm.LKJCholeskyCov(

"noise_chol",

eta=priors["noise_chol"]["eta"],

n=n,

sd_dist=pm.HalfNormal.dist(sigma=priors["noise_chol"]["sigma"]),

)

obs = pm.MvNormal(

"obs", mu=mean, chol=noise_chol, observed=data_obs, dims=["time", "equations"]

)

else:

## This is an alternative likelihood that can recover sensible estimates of the coefficients

## But lacks the multivariate correlation between the timeseries.

sigma = pm.HalfNormal("noise", sigma=priors["noise"]["sigma"], dims=["equations"])

obs = pm.Normal(

"obs", mu=mean, sigma=sigma, observed=data_obs, dims=["time", "equations"]

)

if prior_checks:

idata = pm.sample_prior_predictive()

return model, idata

else:

idata = pm.sample_prior_predictive()

idata.extend(pm.sample(draws=2000, random_seed=130))

pm.sample_posterior_predictive(idata, extend_inferencedata=True, random_seed=rng)

return model, idata

该模型在自回归计算中具有确定性成分,这是每个时间步都需要的,但这里的关键点是我们将 VAR 的似然性建模为具有特定协方差关系的多元正态分布。这些协方差关系的估计给出了关于我们的组件时间序列彼此相关的方式的主要见解。

我们将检查具有 2 个滞后和 2 个方程的 VAR 的结构

n_lags = 2

n_eqs = 2

priors = {

"lag_coefs": {"mu": 0.3, "sigma": 1},

"alpha": {"mu": 15, "sigma": 5},

"noise_chol": {"eta": 1, "sigma": 1},

"noise": {"sigma": 1},

}

model, idata = make_model(n_lags, n_eqs, df, priors)

pm.model_to_graphviz(model)

Sampling: [alpha, lag_coefs, noise_chol, obs]

另一个具有 3 个滞后和 2 个方程的 VAR。

n_lags = 3

n_eqs = 2

model, idata = make_model(n_lags, n_eqs, df, priors)

for rv, shape in model.eval_rv_shapes().items():

print(f"{rv:>11}: shape={shape}")

pm.model_to_graphviz(model)

Sampling: [alpha, lag_coefs, noise_chol, obs]

lag_coefs: shape=(2, 3, 2)

alpha: shape=(2,)

noise_chol_cholesky-cov-packed__: shape=(3,)

noise_chol: shape=(3,)

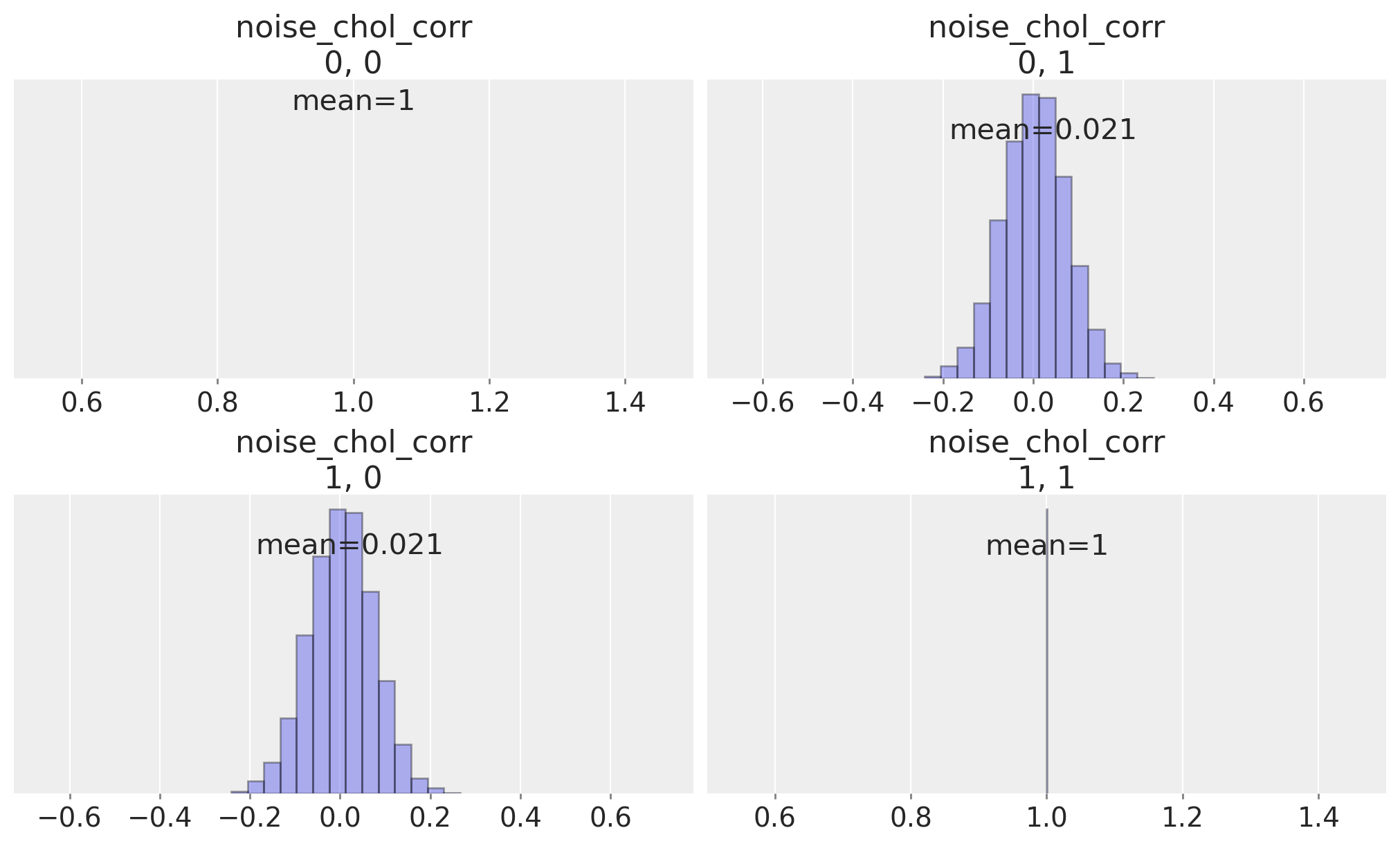

我们可以检查时间序列之间的相关矩阵,该矩阵由先验规范暗示,以查看我们是否允许了它们相关性的平坦均匀先验。

ax = az.plot_posterior(

idata,

var_names="noise_chol_corr",

hdi_prob="hide",

group="prior",

point_estimate="mean",

grid=(2, 2),

kind="hist",

ec="black",

figsize=(10, 4),

)

现在我们将拟合具有 2 个滞后和 2 个方程的 VAR

n_lags = 2

n_eqs = 2

model, idata_fake_data = make_model(n_lags, n_eqs, df, priors, prior_checks=False)

Sampling: [alpha, lag_coefs, noise_chol, obs]

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [lag_coefs, alpha, noise_chol]

Sampling 4 chains for 1_000 tune and 2_000 draw iterations (4_000 + 8_000 draws total) took 360 seconds.

Sampling: [obs]

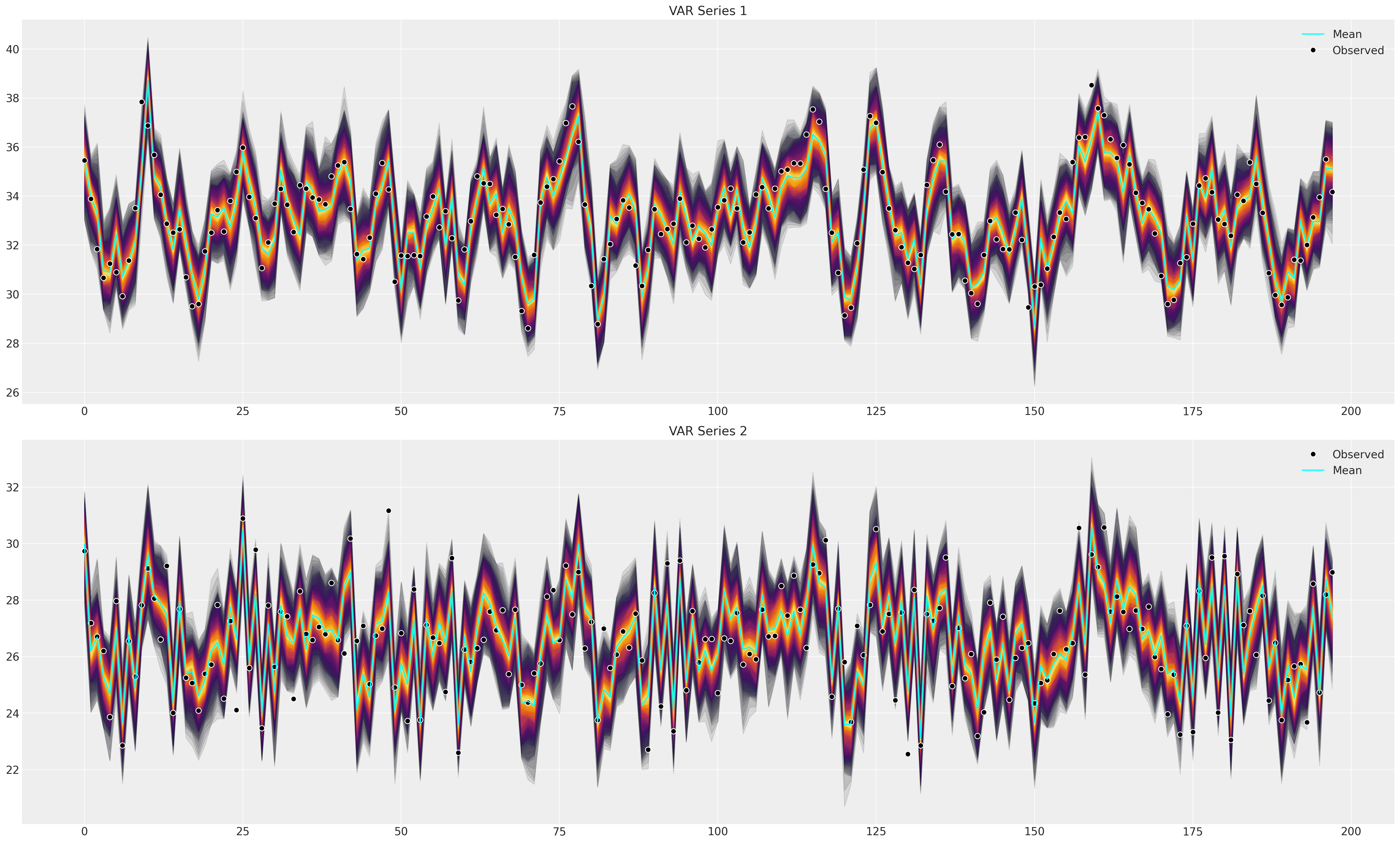

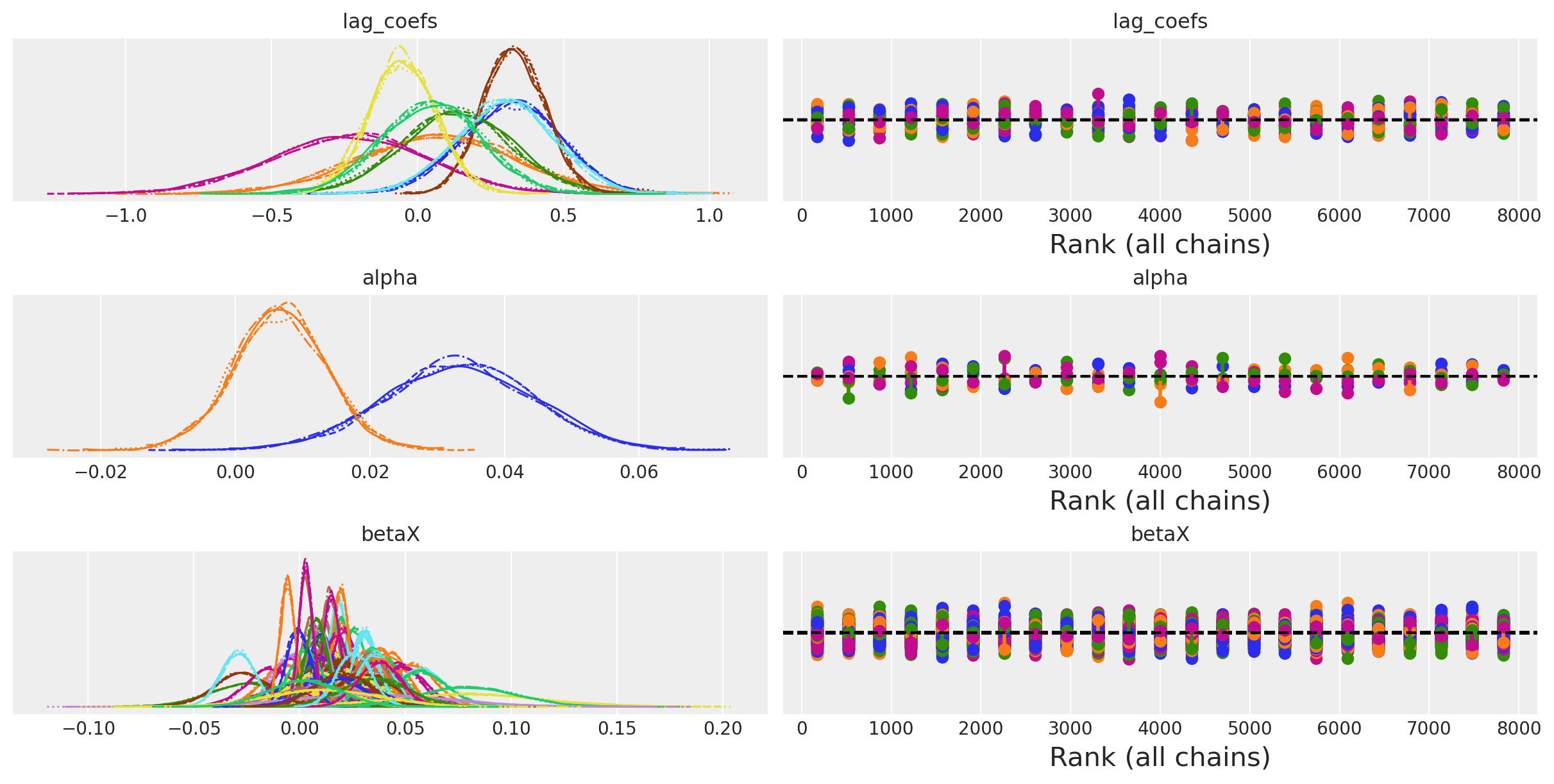

我们现在将绘制一些结果,以查看参数是否被大致恢复。alpha 参数匹配良好,但各个滞后系数显示出差异。

az.summary(idata_fake_data, var_names=["alpha", "lag_coefs", "noise_chol_corr"])

/Users/nathanielforde/mambaforge/envs/myjlabenv/lib/python3.11/site-packages/arviz/stats/diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

| 均值 | 标准差 | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| alpha[x] | 8.607 | 1.765 | 5.380 | 12.076 | 0.029 | 0.020 | 3823.0 | 4602.0 | 1.0 |

| alpha[y] | 17.094 | 1.778 | 13.750 | 20.431 | 0.027 | 0.019 | 4188.0 | 5182.0 | 1.0 |

| lag_coefs[x, 1, x] | 1.333 | 0.062 | 1.218 | 1.450 | 0.001 | 0.001 | 5564.0 | 4850.0 | 1.0 |

| lag_coefs[x, 1, y] | -0.120 | 0.069 | -0.247 | 0.011 | 0.001 | 0.001 | 3739.0 | 4503.0 | 1.0 |

| lag_coefs[x, 2, x] | -0.711 | 0.097 | -0.890 | -0.527 | 0.002 | 0.001 | 3629.0 | 4312.0 | 1.0 |

| lag_coefs[x, 2, y] | 0.267 | 0.073 | 0.126 | 0.403 | 0.001 | 0.001 | 3408.0 | 4318.0 | 1.0 |

| lag_coefs[y, 1, x] | 0.838 | 0.061 | 0.718 | 0.948 | 0.001 | 0.001 | 5203.0 | 5345.0 | 1.0 |

| lag_coefs[y, 1, y] | -0.800 | 0.069 | -0.932 | -0.673 | 0.001 | 0.001 | 3749.0 | 5131.0 | 1.0 |

| lag_coefs[y, 2, x] | 0.094 | 0.097 | -0.087 | 0.277 | 0.002 | 0.001 | 3573.0 | 4564.0 | 1.0 |

| lag_coefs[y, 2, y] | -0.004 | 0.074 | -0.145 | 0.133 | 0.001 | 0.001 | 3448.0 | 4484.0 | 1.0 |

| noise_chol_corr[0, 0] | 1.000 | 0.000 | 1.000 | 1.000 | 0.000 | 0.000 | 8000.0 | 8000.0 | NaN |

| noise_chol_corr[0, 1] | 0.021 | 0.072 | -0.118 | 0.152 | 0.001 | 0.001 | 6826.0 | 5061.0 | 1.0 |

| noise_chol_corr[1, 0] | 0.021 | 0.072 | -0.118 | 0.152 | 0.001 | 0.001 | 6826.0 | 5061.0 | 1.0 |

| noise_chol_corr[1, 1] | 1.000 | 0.000 | 1.000 | 1.000 | 0.000 | 0.000 | 7813.0 | 7824.0 | 1.0 |

az.plot_posterior(idata_fake_data, var_names=["alpha"], ref_val=[8, 18]);

接下来,我们将绘制后验预测分布,以检查拟合模型是否可以捕捉到观测数据中的模式。这是拟合优度的主要测试。

def shade_background(ppc, ax, idx, palette="cividis"):

palette = palette

cmap = plt.get_cmap(palette)

percs = np.linspace(51, 99, 100)

colors = (percs - np.min(percs)) / (np.max(percs) - np.min(percs))

for i, p in enumerate(percs[::-1]):

upper = np.percentile(

ppc[:, idx, :],

p,

axis=1,

)

lower = np.percentile(

ppc[:, idx, :],

100 - p,

axis=1,

)

color_val = colors[i]

ax[idx].fill_between(

x=np.arange(ppc.shape[0]),

y1=upper.flatten(),

y2=lower.flatten(),

color=cmap(color_val),

alpha=0.1,

)

def plot_ppc(idata, df, group="posterior_predictive"):

fig, axs = plt.subplots(2, 1, figsize=(25, 15))

df = pd.DataFrame(idata_fake_data["observed_data"]["obs"].data, columns=["x", "y"])

axs = axs.flatten()

ppc = az.extract(idata, group=group, num_samples=100)["obs"]

# Minus the lagged terms and the constant

shade_background(ppc, axs, 0, "inferno")

axs[0].plot(np.arange(ppc.shape[0]), ppc[:, 0, :].mean(axis=1), color="cyan", label="Mean")

axs[0].plot(df["x"], "o", mfc="black", mec="white", mew=1, markersize=7, label="Observed")

axs[0].set_title("VAR Series 1")

axs[0].legend()

shade_background(ppc, axs, 1, "inferno")

axs[1].plot(df["y"], "o", mfc="black", mec="white", mew=1, markersize=7, label="Observed")

axs[1].plot(np.arange(ppc.shape[0]), ppc[:, 1, :].mean(axis=1), color="cyan", label="Mean")

axs[1].set_title("VAR Series 2")

axs[1].legend()

plot_ppc(idata_fake_data, df)

再次,我们可以检查相关参数的学习后验分布。

ax = az.plot_posterior(

idata_fake_data,

var_names="noise_chol_corr",

hdi_prob="hide",

point_estimate="mean",

grid=(2, 2),

kind="hist",

ec="black",

figsize=(10, 6),

)

应用理论:宏观经济时间序列#

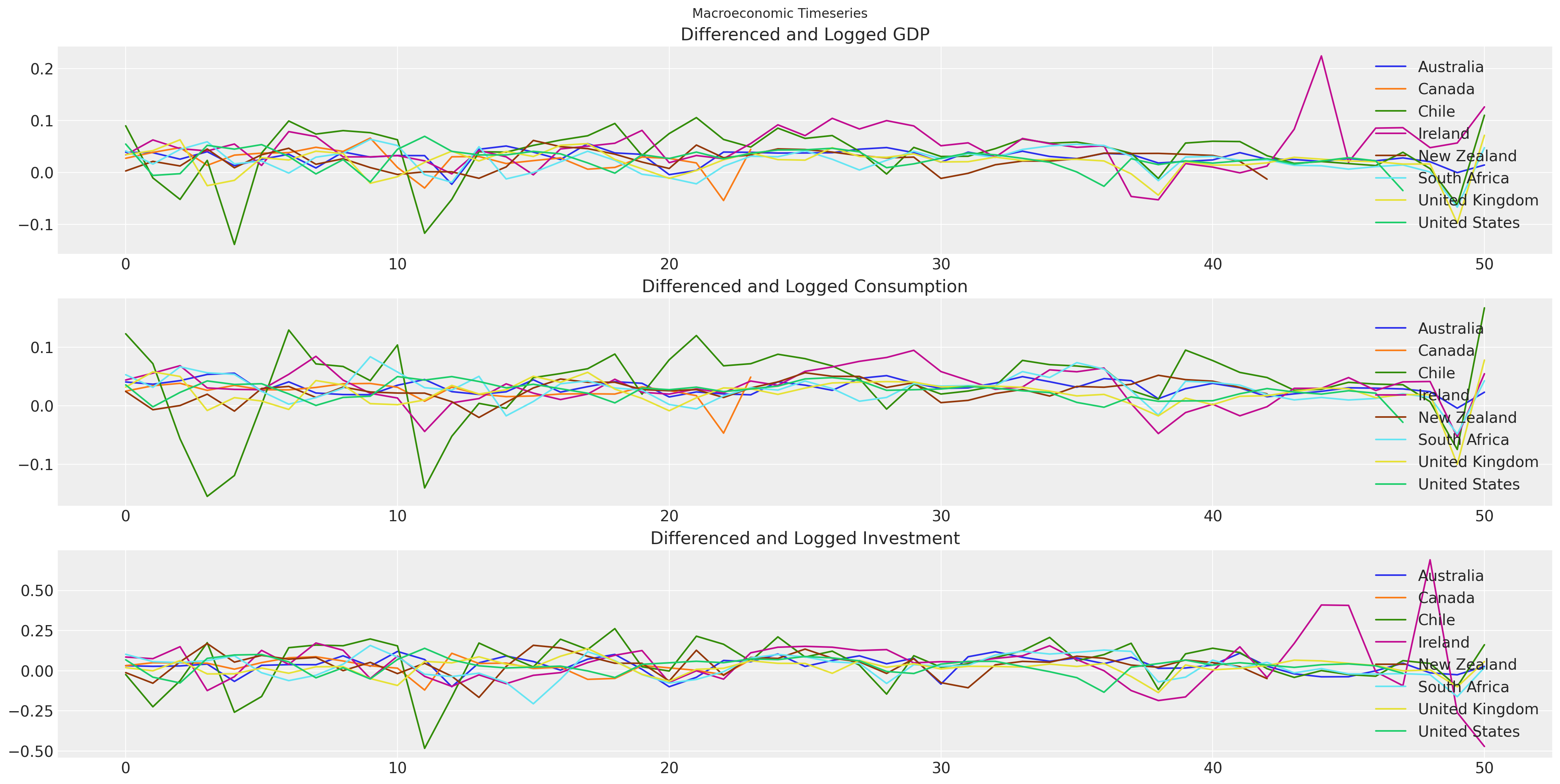

数据来自世界银行的世界发展指标。特别是,我们正在提取 1970 年以来所有国家的 GDP、消费和固定资本形成总额(投资)的年度值。时间序列模型通常在整个序列中具有稳定的均值时效果最佳,因此对于估计过程,我们采用了这些序列的一阶差分和自然对数。

try:

gdp_hierarchical = pd.read_csv(

os.path.join("..", "data", "gdp_data_hierarchical_clean.csv"), index_col=0

)

except FileNotFoundError:

gdp_hierarchical = pd.read_csv(pm.get_data("gdp_data_hierarchical_clean.csv"), ...)

gdp_hierarchical

| 国家 | iso2c | iso3c | 年份 | GDP | CONS | GFCF | dl_gdp | dl_cons | dl_gfcf | more_than_10 | 时间 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 澳大利亚 | AU | AUS | 1971 | 4.647670e+11 | 3.113170e+11 | 7.985100e+10 | 0.039217 | 0.040606 | 0.031705 | True | 1 |

| 2 | 澳大利亚 | AU | AUS | 1972 | 4.829350e+11 | 3.229650e+11 | 8.209200e+10 | 0.038346 | 0.036732 | 0.027678 | True | 2 |

| 3 | 澳大利亚 | AU | AUS | 1973 | 4.955840e+11 | 3.371070e+11 | 8.460300e+10 | 0.025855 | 0.042856 | 0.030129 | True | 3 |

| 4 | 澳大利亚 | AU | AUS | 1974 | 5.159300e+11 | 3.556010e+11 | 8.821400e+10 | 0.040234 | 0.053409 | 0.041796 | True | 4 |

| 5 | 澳大利亚 | AU | AUS | 1975 | 5.228210e+11 | 3.759000e+11 | 8.255900e+10 | 0.013268 | 0.055514 | -0.066252 | True | 5 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 366 | 美国 | US | USA | 2016 | 1.850960e+13 | 1.522497e+13 | 3.802207e+12 | 0.016537 | 0.023425 | 0.021058 | True | 44 |

| 367 | 美国 | US | USA | 2017 | 1.892712e+13 | 1.553075e+13 | 3.947418e+12 | 0.022306 | 0.019885 | 0.037480 | True | 45 |

| 368 | 美国 | US | USA | 2018 | 1.947957e+13 | 1.593427e+13 | 4.119951e+12 | 0.028771 | 0.025650 | 0.042780 | True | 46 |

| 369 | 美国 | US | USA | 2019 | 1.992544e+13 | 1.627888e+13 | 4.248643e+12 | 0.022631 | 0.021396 | 0.030758 | True | 47 |

| 370 | 美国 | US | USA | 2020 | 1.924706e+13 | 1.582501e+13 | 4.182801e+12 | -0.034639 | -0.028277 | -0.015619 | True | 48 |

370 行 × 12 列

fig, axs = plt.subplots(3, 1, figsize=(20, 10))

for country in gdp_hierarchical["country"].unique():

temp = gdp_hierarchical[gdp_hierarchical["country"] == country].reset_index()

axs[0].plot(temp["dl_gdp"], label=f"{country}")

axs[1].plot(temp["dl_cons"], label=f"{country}")

axs[2].plot(temp["dl_gfcf"], label=f"{country}")

axs[0].set_title("Differenced and Logged GDP")

axs[1].set_title("Differenced and Logged Consumption")

axs[2].set_title("Differenced and Logged Investment")

axs[0].legend()

axs[1].legend()

axs[2].legend()

plt.suptitle("Macroeconomic Timeseries");

爱尔兰的经济状况#

爱尔兰因其 GDP 数字而臭名昭著,这些数字在很大程度上是外国直接投资的产物,并且近年来由于向大型跨国公司提供的投资和税收协议而被夸大超出预期。我们将在此处仅关注 GDP 和消费之间的关系。我们只想展示 VAR 估计的机制,您不应过多解读随后的分析。

ireland_df = gdp_hierarchical[gdp_hierarchical["country"] == "Ireland"]

ireland_df.reset_index(inplace=True, drop=True)

ireland_df.head()

| 国家 | iso2c | iso3c | 年份 | GDP | CONS | GFCF | dl_gdp | dl_cons | dl_gfcf | more_than_10 | 时间 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 爱尔兰 | IE | IRL | 1971 | 3.314234e+10 | 2.897699e+10 | 8.317518e+09 | 0.034110 | 0.043898 | 0.085452 | True | 1 |

| 1 | 爱尔兰 | IE | IRL | 1972 | 3.529322e+10 | 3.063538e+10 | 8.967782e+09 | 0.062879 | 0.055654 | 0.075274 | True | 2 |

| 2 | 爱尔兰 | IE | IRL | 1973 | 3.695956e+10 | 3.280221e+10 | 1.041728e+10 | 0.046134 | 0.068340 | 0.149828 | True | 3 |

| 3 | 爱尔兰 | IE | IRL | 1974 | 3.853412e+10 | 3.381524e+10 | 9.207243e+09 | 0.041720 | 0.030416 | -0.123476 | True | 4 |

| 4 | 爱尔兰 | IE | IRL | 1975 | 4.071386e+10 | 3.477232e+10 | 8.874887e+09 | 0.055024 | 0.027910 | -0.036765 | True | 5 |

n_lags = 2

n_eqs = 2

priors = {

## Set prior for expected positive relationship between the variables.

"lag_coefs": {"mu": 0.3, "sigma": 1},

"alpha": {"mu": 0, "sigma": 0.1},

"noise_chol": {"eta": 1, "sigma": 1},

"noise": {"sigma": 1},

}

model, idata_ireland = make_model(

n_lags, n_eqs, ireland_df[["dl_gdp", "dl_cons"]], priors, prior_checks=False

)

idata_ireland

Sampling: [alpha, lag_coefs, noise_chol, obs]

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [lag_coefs, alpha, noise_chol]

Sampling 4 chains for 1_000 tune and 2_000 draw iterations (4_000 + 8_000 draws total) took 36 seconds.

Sampling: [obs]

-

- chain: 4

- draw: 2000

- equations: 2

- lags: 2

- cross_vars: 2

- noise_chol_dim_0: 3

- 时间: 49

- betaX_dim_1: 2

- noise_chol_corr_dim_0: 2

- noise_chol_corr_dim_1: 2

- noise_chol_stds_dim_0: 2

- chain(chain)int640 1 2 3

array([0, 1, 2, 3])

- draw(draw)int640 1 2 3 4 ... 1996 1997 1998 1999

array([ 0, 1, 2, ..., 1997, 1998, 1999])

- equations(equations)<U7'dl_gdp' 'dl_cons'

array(['dl_gdp', 'dl_cons'], dtype='<U7')

- lags(lags)int641 2

array([1, 2])

- cross_vars(cross_vars)<U7'dl_gdp' 'dl_cons'

array(['dl_gdp', 'dl_cons'], dtype='<U7')

- noise_chol_dim_0(noise_chol_dim_0)int640 1 2

array([0, 1, 2])

- 时间(time)int642 3 4 5 6 7 8 ... 45 46 47 48 49 50

array([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50]) - betaX_dim_1(betaX_dim_1)int640 1

array([0, 1])

- noise_chol_corr_dim_0(noise_chol_corr_dim_0)int640 1

array([0, 1])

- noise_chol_corr_dim_1(noise_chol_corr_dim_1)int640 1

array([0, 1])

- noise_chol_stds_dim_0(noise_chol_stds_dim_0)int640 1

array([0, 1])

- lag_coefs(chain, draw, equations, lags, cross_vars)float640.5197 0.07935 ... -0.2045 0.235

array([[[[[ 5.19656576e-01, 7.93542008e-02], [ 2.61607049e-01, -1.72153516e-01]], [[ 3.58280690e-01, 4.69430533e-01], [-1.41598877e-02, 7.07116179e-02]]], [[[-4.16577591e-02, 4.06592634e-01], [ 3.42706574e-01, -5.74590855e-01]], [[ 3.79750238e-01, 3.33743286e-01], [-1.00207466e-01, 1.43191909e-01]]], [[[ 5.15113761e-01, 5.43954306e-02], [ 2.24354001e-01, -1.25071225e-01]], [[ 3.35089469e-01, 3.15187746e-01], [ 8.77271295e-05, 1.81655203e-01]]], ... [[[ 2.78892253e-01, 2.13837349e-01], [ 2.60725950e-01, -1.27187687e-01]], [[ 3.84079652e-01, 9.48802792e-02], [-2.23869617e-02, 2.29483840e-01]]], [[[ 6.31173009e-01, -5.28215508e-01], [-1.55673631e-01, 3.61610751e-02]], [[ 3.27117933e-01, 4.42098735e-01], [-1.36438735e-01, -5.36320280e-03]]], [[[ 4.27130277e-01, -2.91019063e-01], [ 2.63469903e-02, 2.47138008e-01]], [[ 4.50003809e-01, 7.52912358e-02], [-2.04480247e-01, 2.35021007e-01]]]]]) - alpha(chain, draw, equations)float640.01472 -0.001526 ... 0.01042

array([[[ 0.01471676, -0.00152568], [ 0.0441433 , 0.00203566], [ 0.01079889, -0.00421006], ..., [ 0.01721974, 0.00564757], [ 0.04886959, 0.00799192], [ 0.01667415, 0.00371018]], [[ 0.04548506, 0.01127504], [ 0.04432491, -0.00028508], [ 0.03517707, 0.01830341], ..., [ 0.01805819, -0.00392762], [ 0.05267103, 0.02336937], [ 0.04478405, 0.01691428]], [[ 0.03111884, 0.00949071], [ 0.04501899, 0.00520666], [ 0.03759947, 0.00563301], ..., [ 0.02761799, 0.00727374], [ 0.04597957, 0.01626677], [ 0.05225605, 0.01325381]], [[ 0.0365445 , 0.00588842], [ 0.05292467, 0.01589702], [ 0.05850131, 0.00710232], ..., [ 0.03053105, 0.00836573], [ 0.02544402, 0.00399799], [ 0.03312685, 0.01041744]]]) - noise_chol(chain, draw, noise_chol_dim_0)float640.04206 0.01468 ... 0.01262 0.02424

array([[[0.04205913, 0.01467698, 0.02638489], [0.03959323, 0.00462671, 0.02231385], [0.03619061, 0.0115924 , 0.02253414], ..., [0.04634195, 0.01092772, 0.02286984], [0.04250194, 0.01037661, 0.02405165], [0.04079687, 0.00585382, 0.02524456]], [[0.04310706, 0.00775202, 0.02517299], [0.04330754, 0.01079034, 0.02413016], [0.03938486, 0.01259796, 0.02279358], ..., [0.04874519, 0.01286422, 0.02864554], [0.04704716, 0.01101798, 0.02170003], [0.04075767, 0.001405 , 0.02298114]], [[0.04082337, 0.00868785, 0.0205891 ], [0.04190526, 0.0087839 , 0.02398674], [0.0479445 , 0.01780939, 0.0228716 ], ..., [0.04367215, 0.00381667, 0.02414608], [0.04618374, 0.00985851, 0.0241093 ], [0.04771735, 0.01976765, 0.02301084]], [[0.03937039, 0.01452327, 0.02261826], [0.04017701, 0.00773141, 0.01975944], [0.04501176, 0.00872818, 0.01975194], ..., [0.04350486, 0.00854354, 0.02546362], [0.04543641, 0.01214901, 0.023637 ], [0.04664034, 0.01261794, 0.02423548]]]) - betaX(chain, draw, time, betaX_dim_1)float640.03846 0.05127 ... 0.05137 0.02155

array([[[[ 0.03845828, 0.05127494], [ 0.03626535, 0.0516547 ], [ 0.02439747, 0.03340475], ..., [ 0.06606994, 0.05073041], [ 0.04383074, 0.03827857], [ 0.03082664, -0.00237368]], [[ 0.0064756 , 0.04532004], [ 0.01543582, 0.04199548], [-0.0128284 , 0.03115703], ..., [ 0.027321 , 0.04158131], [ 0.02104586, 0.02920793], [-0.03139562, 0.00495901]], [[ 0.03757968, 0.04658869], [ 0.03462795, 0.04711412], [ 0.0249478 , 0.03598497], ..., ... ..., [ 0.05183073, 0.04113899], [ 0.03959469, 0.02977498], [ 0.01174284, 0.02525658]], [[ 0.00656781, 0.04028385], [-0.01475617, 0.03642657], [ 0.00555572, 0.0204332 ], ..., [ 0.02066245, 0.03446825], [-0.00354691, 0.02198112], [ 0.05819251, -0.01170932]], [[ 0.02240881, 0.03582803], [ 0.01522756, 0.02612792], [ 0.0270731 , 0.02769207], ..., [ 0.03377353, 0.03059268], [ 0.0208055 , 0.01661153], [ 0.05137065, 0.02154906]]]]) - noise_chol_corr(chain, draw, noise_chol_corr_dim_0, noise_chol_corr_dim_1)float641.0 0.4861 0.4861 ... 0.4618 1.0

array([[[[1. , 0.48611626], [0.48611626, 1. ]], [[1. , 0.20302855], [0.20302855, 1. ]], [[1. , 0.45745439], [0.45745439, 1. ]], ..., [[1. , 0.43113357], [0.43113357, 1. ]], [[1. , 0.39613595], [0.39613595, 1. ]], [[1. , 0.22589091], [0.22589091, 1. ]]], ... [[[1. , 0.54030892], [0.54030892, 1. ]], [[1. , 0.36437699], [0.36437699, 1. ]], [[1. , 0.40418614], [0.40418614, 1. ]], ..., [[1. , 0.31809258], [0.31809258, 1. ]], [[1. , 0.45713496], [0.45713496, 1. ]], [[1. , 0.46179879], [0.46179879, 1. ]]]]) - noise_chol_stds(chain, draw, noise_chol_stds_dim_0)float640.04206 0.03019 ... 0.04664 0.02732

array([[[0.04205913, 0.03019232], [0.03959323, 0.02278847], [0.03619061, 0.0253411 ], ..., [0.04634195, 0.02534649], [0.04250194, 0.02619458], [0.04079687, 0.02591438]], [[0.04310706, 0.02633958], [0.04330754, 0.02643286], [0.03938486, 0.02604334], ..., [0.04874519, 0.03140152], [0.04704716, 0.02433695], [0.04075767, 0.02302405]], [[0.04082337, 0.02234703], [0.04190526, 0.02554449], [0.0479445 , 0.02898766], ..., [0.04367215, 0.02444586], [0.04618374, 0.02604704], [0.04771735, 0.03033577]], [[0.03937039, 0.02687956], [0.04017701, 0.02121816], [0.04501176, 0.02159444], ..., [0.04350486, 0.02685867], [0.04543641, 0.02657642], [0.04664034, 0.02732345]]])

- chainPandasIndex

PandasIndex(Int64Index([0, 1, 2, 3], dtype='int64', name='chain'))

- drawPandasIndex

PandasIndex(Int64Index([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, ... 1990, 1991, 1992, 1993, 1994, 1995, 1996, 1997, 1998, 1999], dtype='int64', name='draw', length=2000)) - equationsPandasIndex

PandasIndex(Index(['dl_gdp', 'dl_cons'], dtype='object', name='equations'))

- lagsPandasIndex

PandasIndex(Int64Index([1, 2], dtype='int64', name='lags'))

- cross_varsPandasIndex

PandasIndex(Index(['dl_gdp', 'dl_cons'], dtype='object', name='cross_vars'))

- noise_chol_dim_0PandasIndex

PandasIndex(Int64Index([0, 1, 2], dtype='int64', name='noise_chol_dim_0'))

- 时间PandasIndex

PandasIndex(Int64Index([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50], dtype='int64', name='time')) - betaX_dim_1PandasIndex

PandasIndex(Int64Index([0, 1], dtype='int64', name='betaX_dim_1'))

- noise_chol_corr_dim_0PandasIndex

PandasIndex(Int64Index([0, 1], dtype='int64', name='noise_chol_corr_dim_0'))

- noise_chol_corr_dim_1PandasIndex

PandasIndex(Int64Index([0, 1], dtype='int64', name='noise_chol_corr_dim_1'))

- noise_chol_stds_dim_0PandasIndex

PandasIndex(Int64Index([0, 1], dtype='int64', name='noise_chol_stds_dim_0'))

- created_at

- 2023-02-21T19:21:22.942792

- arviz_version

- 0.14.0

- inference_library

- pymc

- inference_library_version

- 5.0.1

- sampling_time

- 36.195340156555176

- tuning_steps

- 1000

<xarray.Dataset> Dimensions: (chain: 4, draw: 2000, equations: 2, lags: 2, cross_vars: 2, noise_chol_dim_0: 3, time: 49, betaX_dim_1: 2, noise_chol_corr_dim_0: 2, noise_chol_corr_dim_1: 2, noise_chol_stds_dim_0: 2) Coordinates: * chain (chain) int64 0 1 2 3 * draw (draw) int64 0 1 2 3 4 5 ... 1995 1996 1997 1998 1999 * equations (equations) <U7 'dl_gdp' 'dl_cons' * lags (lags) int64 1 2 * cross_vars (cross_vars) <U7 'dl_gdp' 'dl_cons' * noise_chol_dim_0 (noise_chol_dim_0) int64 0 1 2 * time (time) int64 2 3 4 5 6 7 8 9 ... 44 45 46 47 48 49 50 * betaX_dim_1 (betaX_dim_1) int64 0 1 * noise_chol_corr_dim_0 (noise_chol_corr_dim_0) int64 0 1 * noise_chol_corr_dim_1 (noise_chol_corr_dim_1) int64 0 1 * noise_chol_stds_dim_0 (noise_chol_stds_dim_0) int64 0 1 Data variables: lag_coefs (chain, draw, equations, lags, cross_vars) float64 ... alpha (chain, draw, equations) float64 0.01472 ... 0.01042 noise_chol (chain, draw, noise_chol_dim_0) float64 0.04206 ..... betaX (chain, draw, time, betaX_dim_1) float64 0.03846 .... noise_chol_corr (chain, draw, noise_chol_corr_dim_0, noise_chol_corr_dim_1) float64 ... noise_chol_stds (chain, draw, noise_chol_stds_dim_0) float64 0.042... Attributes: created_at: 2023-02-21T19:21:22.942792 arviz_version: 0.14.0 inference_library: pymc inference_library_version: 5.0.1 sampling_time: 36.195340156555176 tuning_steps: 1000xarray.Dataset -

- chain: 4

- draw: 2000

- 时间: 49

- equations: 2

- chain(chain)int640 1 2 3

array([0, 1, 2, 3])

- draw(draw)int640 1 2 3 4 ... 1996 1997 1998 1999

array([ 0, 1, 2, ..., 1997, 1998, 1999])

- 时间(time)int642 3 4 5 6 7 8 ... 45 46 47 48 49 50

array([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50]) - equations(equations)<U7'dl_gdp' 'dl_cons'

array(['dl_gdp', 'dl_cons'], dtype='<U7')

- obs(chain, draw, time, equations)float640.07266 0.05554 ... 0.131 0.05994

array([[[[ 0.07266384, 0.05554043], [-0.03723739, -0.01044936], [ 0.06613757, 0.01211585], ..., [ 0.13244691, 0.06005397], [-0.02656575, 0.02116827], [ 0.07340778, 0.01205019]], [[ 0.08268452, 0.01698459], [ 0.02978633, 0.01275627], [ 0.01878881, 0.04764196], ..., [ 0.12656091, 0.00217327], [ 0.04914098, -0.00649668], [ 0.03080986, 0.05185062]], [[ 0.02621489, 0.00719224], [ 0.01166067, 0.0217407 ], [ 0.06380577, 0.06132611], ..., ... ..., [ 0.13366362, 0.0631761 ], [ 0.08309923, 0.10108214], [ 0.02315299, 0.02944125]], [[ 0.10004432, 0.08094177], [ 0.02037102, 0.08725727], [ 0.00521511, 0.03733144], ..., [ 0.05345503, 0.08313247], [ 0.03175078, 0.01423723], [ 0.16007207, -0.04465602]], [[ 0.05437818, 0.0252325 ], [ 0.08520019, 0.04364142], [ 0.02855142, 0.03297121], ..., [ 0.15104638, 0.08219397], [ 0.1100447 , 0.010313 ], [ 0.13102166, 0.05993663]]]])

- chainPandasIndex

PandasIndex(Int64Index([0, 1, 2, 3], dtype='int64', name='chain'))

- drawPandasIndex

PandasIndex(Int64Index([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, ... 1990, 1991, 1992, 1993, 1994, 1995, 1996, 1997, 1998, 1999], dtype='int64', name='draw', length=2000)) - 时间PandasIndex

PandasIndex(Int64Index([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50], dtype='int64', name='time')) - equationsPandasIndex

PandasIndex(Index(['dl_gdp', 'dl_cons'], dtype='object', name='equations'))

- created_at

- 2023-02-21T19:21:57.788979

- arviz_version

- 0.14.0

- inference_library

- pymc

- inference_library_version

- 5.0.1

<xarray.Dataset> Dimensions: (chain: 4, draw: 2000, time: 49, equations: 2) Coordinates: * chain (chain) int64 0 1 2 3 * draw (draw) int64 0 1 2 3 4 5 6 ... 1993 1994 1995 1996 1997 1998 1999 * time (time) int64 2 3 4 5 6 7 8 9 10 11 ... 42 43 44 45 46 47 48 49 50 * equations (equations) <U7 'dl_gdp' 'dl_cons' Data variables: obs (chain, draw, time, equations) float64 0.07266 ... 0.05994 Attributes: created_at: 2023-02-21T19:21:57.788979 arviz_version: 0.14.0 inference_library: pymc inference_library_version: 5.0.1xarray.Dataset -

- chain: 4

- draw: 2000

- chain(chain)int640 1 2 3

array([0, 1, 2, 3])

- draw(draw)int640 1 2 3 4 ... 1996 1997 1998 1999

array([ 0, 1, 2, ..., 1997, 1998, 1999])

- step_size(chain, draw)float640.2559 0.2559 ... 0.2865 0.2865

array([[0.25588962, 0.25588962, 0.25588962, ..., 0.25588962, 0.25588962, 0.25588962], [0.3697469 , 0.3697469 , 0.3697469 , ..., 0.3697469 , 0.3697469 , 0.3697469 ], [0.42101555, 0.42101555, 0.42101555, ..., 0.42101555, 0.42101555, 0.42101555], [0.28653149, 0.28653149, 0.28653149, ..., 0.28653149, 0.28653149, 0.28653149]]) - perf_counter_start(chain, draw)float641.626e+05 1.626e+05 ... 1.626e+05

array([[162631.64961679, 162631.65651296, 162631.66551496, ..., 162648.95160179, 162648.95829438, 162648.96578838], [162631.04184112, 162631.05123112, 162631.0605795 , ..., 162648.36295925, 162648.37161829, 162648.37843467], [162632.29036962, 162632.30043392, 162632.30901504, ..., 162649.81244104, 162649.81932071, 162649.8258015 ], [162631.24282437, 162631.25291775, 162631.26280779, ..., 162648.34229779, 162648.35076021, 162648.35829229]]) - acceptance_rate(chain, draw)float640.452 0.882 ... 0.6842 0.6953

array([[0.45200921, 0.88196032, 0.85477008, ..., 0.96927221, 0.84755787, 0.72968371], [0.98771315, 0.8009883 , 0.80420022, ..., 0.86016188, 0.75991948, 0.20906901], [0.99751756, 0.9866047 , 0.73547533, ..., 0.95386729, 0.73301308, 0.6478722 ], [0.97393312, 0.4316111 , 0.35684925, ..., 0.95519091, 0.6841993 , 0.69534805]]) - largest_eigval(chain, draw)float64nan nan nan nan ... nan nan nan nan

array([[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]) - lp(chain, draw)float64192.2 189.8 192.1 ... 185.6 191.9

array([[192.18966643, 189.81728435, 192.14034097, ..., 192.06668954, 193.42245096, 191.36656983], [190.79957544, 190.83997606, 187.12480105, ..., 185.29102408, 187.45700699, 188.40205797], [192.65934246, 194.23007138, 192.32551615, ..., 192.41982885, 189.68059219, 187.40005344], [193.08342569, 191.03132672, 188.24977915, ..., 191.53209216, 185.60644058, 191.94696886]]) - n_steps(chain, draw)float6415.0 15.0 15.0 ... 15.0 15.0 15.0

array([[15., 15., 15., ..., 15., 15., 15.], [15., 15., 15., ..., 15., 15., 15.], [15., 15., 15., ..., 15., 15., 15.], [15., 15., 15., ..., 15., 15., 15.]]) - perf_counter_diff(chain, draw)float640.006816 0.00892 ... 0.009416

array([[0.00681596, 0.00891987, 0.00853138, ..., 0.00662113, 0.00743258, 0.00674708], [0.00924708, 0.00926471, 0.00896663, ..., 0.00856346, 0.00672742, 0.00760842], [0.00998017, 0.0084875 , 0.00804771, ..., 0.00681546, 0.00641321, 0.00689512], [0.01000392, 0.0098055 , 0.00847175, ..., 0.008375 , 0.00745308, 0.00941558]]) - max_energy_error(chain, draw)float642.216 0.4151 0.2987 ... 1.156 1.252

array([[ 2.21643644, 0.41508426, 0.29868443, ..., -0.09954207, 0.39111492, 0.94939641], [-0.23714436, 0.34132827, 0.66750119, ..., 0.33884617, 1.38906123, 4.3288482 ], [-0.13750741, -0.07262999, 0.49443942, ..., -0.80694987, 0.63095501, 0.99504025], [-0.1666122 , 2.17550408, 3.13785432, ..., -0.33376487, 1.15567682, 1.25234077]]) - tree_depth(chain, draw)int644 4 4 4 4 4 4 4 ... 4 4 4 4 4 4 4 4

array([[4, 4, 4, ..., 4, 4, 4], [4, 4, 4, ..., 4, 4, 4], [4, 4, 4, ..., 4, 4, 4], [4, 4, 4, ..., 4, 4, 4]]) - step_size_bar(chain, draw)float640.2629 0.2629 ... 0.2741 0.2741

array([[0.26288954, 0.26288954, 0.26288954, ..., 0.26288954, 0.26288954, 0.26288954], [0.28887259, 0.28887259, 0.28887259, ..., 0.28887259, 0.28887259, 0.28887259], [0.26055628, 0.26055628, 0.26055628, ..., 0.26055628, 0.26055628, 0.26055628], [0.27413238, 0.27413238, 0.27413238, ..., 0.27413238, 0.27413238, 0.27413238]]) - smallest_eigval(chain, draw)float64nan nan nan nan ... nan nan nan nan

array([[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]) - energy_error(chain, draw)float640.1063 -0.1922 ... 0.6039 -0.8926

array([[ 0.10633161, -0.19223807, -0.07324313, ..., -0.05689863, 0.11804534, 0.04478887], [-0.11199666, 0.20578808, -0.1399356 , ..., 0.205265 , -0.25117668, 1.01038826], [-0.13750741, -0.03513089, 0.20506484, ..., -0.70430077, -0.01280797, -0.16463478], [-0.1666122 , 0.61632692, -0.48263151, ..., 0.10197503, 0.60388915, -0.89258914]]) - diverging(chain, draw)boolFalse False False ... False False

array([[False, False, False, ..., False, False, False], [False, False, False, ..., False, False, False], [False, False, False, ..., False, False, False], [False, False, False, ..., False, False, False]]) - index_in_trajectory(chain, draw)int648 -6 -6 -6 10 ... -12 10 10 -10 -6

array([[ 8, -6, -6, ..., 7, -11, -10], [-15, 15, 9, ..., 12, 8, 4], [-14, 6, -12, ..., -13, 7, -6], [ 3, 6, 2, ..., 10, -10, -6]]) - energy(chain, draw)float64-186.7 -183.7 ... -182.6 -182.3

array([[-186.71221527, -183.69718813, -180.80066873, ..., -187.0691717 , -185.80251922, -185.85593343], [-186.62691362, -183.68157888, -180.83446734, ..., -180.21656638, -177.21042455, -175.34992831], [-185.6449068 , -189.74583709, -186.80186591, ..., -176.16387582, -182.34563041, -179.97120628], [-187.09037814, -183.89547442, -175.47726337, ..., -188.01409915, -182.5587388 , -182.28892311]]) - process_time_diff(chain, draw)float640.007787 0.01354 ... 0.01025

array([[0.007787, 0.013544, 0.01156 , ..., 0.00884 , 0.010774, 0.009 ], [0.010579, 0.011096, 0.009645, ..., 0.007559, 0.010655, 0.012674], [0.015694, 0.01198 , 0.01068 , ..., 0.009102, 0.008428, 0.009367], [0.013038, 0.014209, 0.010028, ..., 0.012215, 0.008676, 0.010252]]) - reached_max_treedepth(chain, draw)boolFalse False False ... False False

array([[False, False, False, ..., False, False, False], [False, False, False, ..., False, False, False], [False, False, False, ..., False, False, False], [False, False, False, ..., False, False, False]])

- chainPandasIndex

PandasIndex(Int64Index([0, 1, 2, 3], dtype='int64', name='chain'))

- drawPandasIndex

PandasIndex(Int64Index([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, ... 1990, 1991, 1992, 1993, 1994, 1995, 1996, 1997, 1998, 1999], dtype='int64', name='draw', length=2000))

- created_at

- 2023-02-21T19:21:22.951462

- arviz_version

- 0.14.0

- inference_library

- pymc

- inference_library_version

- 5.0.1

- sampling_time

- 36.195340156555176

- tuning_steps

- 1000

<xarray.Dataset> Dimensions: (chain: 4, draw: 2000) Coordinates: * chain (chain) int64 0 1 2 3 * draw (draw) int64 0 1 2 3 4 5 ... 1995 1996 1997 1998 1999 Data variables: (12/17) step_size (chain, draw) float64 0.2559 0.2559 ... 0.2865 0.2865 perf_counter_start (chain, draw) float64 1.626e+05 ... 1.626e+05 acceptance_rate (chain, draw) float64 0.452 0.882 ... 0.6842 0.6953 largest_eigval (chain, draw) float64 nan nan nan nan ... nan nan nan lp (chain, draw) float64 192.2 189.8 ... 185.6 191.9 n_steps (chain, draw) float64 15.0 15.0 15.0 ... 15.0 15.0 ... ... energy_error (chain, draw) float64 0.1063 -0.1922 ... -0.8926 diverging (chain, draw) bool False False False ... False False index_in_trajectory (chain, draw) int64 8 -6 -6 -6 10 ... 10 10 -10 -6 energy (chain, draw) float64 -186.7 -183.7 ... -182.6 -182.3 process_time_diff (chain, draw) float64 0.007787 0.01354 ... 0.01025 reached_max_treedepth (chain, draw) bool False False False ... False False Attributes: created_at: 2023-02-21T19:21:22.951462 arviz_version: 0.14.0 inference_library: pymc inference_library_version: 5.0.1 sampling_time: 36.195340156555176 tuning_steps: 1000xarray.Dataset -

- chain: 1

- draw: 500

- equations: 2

- noise_chol_corr_dim_0: 2

- noise_chol_corr_dim_1: 2

- noise_chol_stds_dim_0: 2

- 时间: 49

- betaX_dim_1: 2

- noise_chol_dim_0: 3

- lags: 2

- cross_vars: 2

- chain(chain)int640

array([0])

- draw(draw)int640 1 2 3 4 5 ... 495 496 497 498 499

array([ 0, 1, 2, ..., 497, 498, 499])

- equations(equations)<U7'dl_gdp' 'dl_cons'

array(['dl_gdp', 'dl_cons'], dtype='<U7')

- noise_chol_corr_dim_0(noise_chol_corr_dim_0)int640 1

array([0, 1])

- noise_chol_corr_dim_1(noise_chol_corr_dim_1)int640 1

array([0, 1])

- noise_chol_stds_dim_0(noise_chol_stds_dim_0)int640 1

array([0, 1])

- 时间(time)int642 3 4 5 6 7 8 ... 45 46 47 48 49 50

array([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50]) - betaX_dim_1(betaX_dim_1)int640 1

array([0, 1])

- noise_chol_dim_0(noise_chol_dim_0)int640 1 2

array([0, 1, 2])

- lags(lags)int641 2

array([1, 2])

- cross_vars(cross_vars)<U7'dl_gdp' 'dl_cons'

array(['dl_gdp', 'dl_cons'], dtype='<U7')

- alpha(chain, draw, equations)float64-0.02173 -0.1333 ... 0.04148

array([[[-2.17321208e-02, -1.33334684e-01], [ 5.34931179e-02, 1.59499447e-01], [-9.49314248e-03, 5.64533641e-02], [-2.33126640e-02, -1.02705798e-01], [ 1.26733115e-01, 7.69344011e-02], [-1.80317474e-02, 6.57613825e-02], [-1.13071369e-01, 1.51406968e-01], [-1.54448261e-01, 1.31782276e-01], [-3.17081453e-02, 1.90268718e-02], [-1.18113054e-01, 1.62388440e-01], [ 7.05559903e-02, -1.19139213e-02], [ 1.33057457e-01, -3.39059486e-02], [-2.55956804e-02, -6.54044042e-02], [-8.17956874e-03, 1.98647714e-01], [ 5.57149931e-03, -4.32720912e-02], [ 8.87368333e-02, 1.71219288e-01], [ 2.10682139e-02, -1.24616571e-01], [-1.20201764e-03, -1.17523975e-01], [-9.64406169e-02, 6.16024940e-02], [ 8.76603291e-02, -8.72035128e-02], ... [ 1.37657923e-01, 1.27996799e-01], [ 5.13551369e-02, -1.31544671e-01], [ 1.00260415e-02, -1.07408884e-03], [-5.83019260e-02, -2.54488291e-02], [ 3.70272843e-02, 2.20626431e-01], [ 3.03187875e-02, 2.52427570e-02], [ 7.58630825e-02, -2.94336306e-01], [-2.36982827e-02, -8.84849345e-03], [-1.77442781e-02, -8.50522727e-03], [ 4.80910337e-02, 1.46007292e-02], [ 1.67488753e-01, 2.23774479e-01], [-4.09985360e-02, -1.48536396e-02], [ 4.67375840e-02, -2.03043589e-02], [-1.12377222e-01, -8.82438720e-02], [-9.90900254e-02, 1.36751395e-01], [-2.53327369e-02, -7.34878400e-02], [-4.96505019e-02, 8.64545788e-02], [ 3.48784048e-02, 2.66594474e-01], [ 2.83293109e-02, -7.74836476e-02], [ 7.36847485e-02, 4.14770386e-02]]]) - noise_chol_corr(chain, draw, noise_chol_corr_dim_0, noise_chol_corr_dim_1)float641.0 -0.2284 -0.2284 ... -0.5198 1.0

array([[[[ 1. , -0.22837651], [-0.22837651, 1. ]], [[ 1. , 0.82351754], [ 0.82351754, 1. ]], [[ 1. , 0.34327501], [ 0.34327501, 1. ]], ..., [[ 1. , 0.69452847], [ 0.69452847, 1. ]], [[ 1. , 0.42945802], [ 0.42945802, 1. ]], [[ 1. , -0.5198015 ], [-0.5198015 , 1. ]]]]) - noise_chol_stds(chain, draw, noise_chol_stds_dim_0)float640.4793 1.124 ... 0.01728 0.2597

array([[[4.79284896e-01, 1.12391550e+00], [6.97040739e-01, 9.54652181e-01], [1.59097130e+00, 3.31920295e-01], [1.15243377e+00, 7.82453571e-02], [5.53414777e-01, 2.06989481e-01], [2.14023145e+00, 4.47391867e-02], [2.60874906e-01, 1.49356985e+00], [1.20751677e+00, 5.06368239e-01], [2.93252219e-01, 9.22195628e-02], [5.59250055e-01, 8.46677717e-01], [3.33437205e-01, 5.50176517e-01], [1.67605175e-02, 3.56294802e-01], [2.30751158e-01, 1.10548076e+00], [1.55569836e+00, 9.90290581e-01], [1.41325046e+00, 1.01313301e+00], [7.50295560e-01, 6.17414964e-01], [5.22344641e-01, 7.23205322e-01], [1.41627088e+00, 8.49720046e-01], [1.80338965e+00, 1.65866311e+00], [3.62065401e-02, 3.03571965e+00], ... [1.51141877e+00, 1.44833737e+00], [1.10143071e-01, 4.36921244e-01], [1.53377987e+00, 8.92707864e-01], [7.70254088e-01, 2.15079477e+00], [1.24557997e-01, 1.00007158e+00], [1.26361991e+00, 1.12878392e-01], [2.11441792e-01, 6.76319368e-02], [7.25305848e-01, 2.20809866e+00], [2.95885724e-01, 1.33235181e+00], [9.91874436e-01, 9.99117482e-01], [9.37832970e-01, 8.39861991e-01], [1.54576189e-01, 2.57803495e-01], [1.05339644e+00, 2.84286425e-01], [1.87852079e-01, 6.49825798e-01], [1.41159170e-01, 2.71423310e-01], [1.58019583e+00, 1.23724822e+00], [8.82855209e-01, 2.96738277e+00], [3.47493609e-01, 2.50978228e+00], [1.11940315e+00, 6.25613502e-01], [1.72818848e-02, 2.59731360e-01]]]) - betaX(chain, draw, time, betaX_dim_1)float640.05801 0.1928 ... 0.1824 0.1416

array([[[[ 0.05801452, 0.19282852], [ 0.06136907, 0.19021009], [ 0.06498837, 0.12662961], ..., [ 0.1263401 , 0.22265808], [ 0.09465621, 0.1605613 ], [ 0.12072331, 0.0342081 ]], [[ 0.03458694, 0.04445992], [ 0.077954 , 0.03533871], [ 0.01711512, 0.03041004], ..., [ 0.09682593, 0.02314592], [ 0.09850186, 0.01292326], [-0.03044917, -0.00261849]], [[ 0.03363234, 0.0448288 ], [ 0.0509058 , 0.01335756], [ 0.0114371 , -0.01758728], ..., ... ..., [-0.03846538, 0.06103218], [-0.03609514, 0.01253422], [ 0.11130663, 0.03136471]], [[ 0.15524741, 0.02883929], [ 0.16256085, 0.05265778], [ 0.12545039, -0.00345506], ..., [ 0.14048099, 0.04771948], [ 0.11854582, 0.04748313], [ 0.01834497, -0.07335791]], [[ 0.13089945, -0.05260524], [ 0.15576556, -0.06532189], [ 0.20108248, -0.01115042], ..., [ 0.12102344, 0.0121013 ], [ 0.13921009, -0.00432658], [ 0.18239744, 0.14157838]]]]) - noise_chol(chain, draw, noise_chol_dim_0)float640.4793 -0.2567 ... -0.135 0.2219

array([[[ 0.4792849 , -0.2566759 , 1.09421366], [ 0.69704074, 0.78617282, 0.5415654 ], [ 1.5909713 , 0.11393994, 0.31175114], ..., [ 0.34749361, 1.74311526, 1.80570106], [ 1.11940315, 0.26867474, 0.56498331], [ 0.01728188, -0.13500875, 0.22188514]]]) - lag_coefs(chain, draw, equations, lags, cross_vars)float640.8718 -0.5211 ... 0.5638 -0.01947

array([[[[[ 0.87178822, -0.52109414], [ 0.81192969, 0.10257338]], [[ 1.66388457, 1.39492785], [ 0.2402051 , 0.0541874 ]]], [[[-0.30618212, 0.79757413], [ 1.30337738, -0.79747345]], [[ 0.38143198, 0.35600826], [-0.37832199, 0.309069 ]]], [[[-0.2397557 , 0.95264081], [ 0.11852561, -0.19028039]], [[ 0.88990685, 0.74043063], [-0.60850236, -0.71937965]]], ... [[[-0.33751836, -2.02597956], [ 1.06725697, -0.69540281]], [[ 1.2472135 , 0.17422232], [-0.62668472, -0.00886019]]], [[[ 0.89838374, 1.17917942], [-0.07513856, 0.81314769]], [[-0.23967996, 1.03413277], [ 0.52659114, -0.71997653]]], [[[ 0.5282788 , -0.52010047], [ 0.32594737, 2.63132424]], [[ 0.40303183, -1.73076034], [ 0.56375655, -0.01946577]]]]])

- chainPandasIndex

PandasIndex(Int64Index([0], dtype='int64', name='chain'))

- drawPandasIndex

PandasIndex(Int64Index([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, ... 490, 491, 492, 493, 494, 495, 496, 497, 498, 499], dtype='int64', name='draw', length=500)) - equationsPandasIndex

PandasIndex(Index(['dl_gdp', 'dl_cons'], dtype='object', name='equations'))

- noise_chol_corr_dim_0PandasIndex

PandasIndex(Int64Index([0, 1], dtype='int64', name='noise_chol_corr_dim_0'))

- noise_chol_corr_dim_1PandasIndex

PandasIndex(Int64Index([0, 1], dtype='int64', name='noise_chol_corr_dim_1'))

- noise_chol_stds_dim_0PandasIndex

PandasIndex(Int64Index([0, 1], dtype='int64', name='noise_chol_stds_dim_0'))

- 时间PandasIndex

PandasIndex(Int64Index([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50], dtype='int64', name='time')) - betaX_dim_1PandasIndex

PandasIndex(Int64Index([0, 1], dtype='int64', name='betaX_dim_1'))

- noise_chol_dim_0PandasIndex

PandasIndex(Int64Index([0, 1, 2], dtype='int64', name='noise_chol_dim_0'))

- lagsPandasIndex

PandasIndex(Int64Index([1, 2], dtype='int64', name='lags'))

- cross_varsPandasIndex

PandasIndex(Index(['dl_gdp', 'dl_cons'], dtype='object', name='cross_vars'))

- created_at

- 2023-02-21T19:20:42.612680

- arviz_version

- 0.14.0

- inference_library

- pymc

- inference_library_version

- 5.0.1

<xarray.Dataset> Dimensions: (chain: 1, draw: 500, equations: 2, noise_chol_corr_dim_0: 2, noise_chol_corr_dim_1: 2, noise_chol_stds_dim_0: 2, time: 49, betaX_dim_1: 2, noise_chol_dim_0: 3, lags: 2, cross_vars: 2) Coordinates: * chain (chain) int64 0 * draw (draw) int64 0 1 2 3 4 5 ... 494 495 496 497 498 499 * equations (equations) <U7 'dl_gdp' 'dl_cons' * noise_chol_corr_dim_0 (noise_chol_corr_dim_0) int64 0 1 * noise_chol_corr_dim_1 (noise_chol_corr_dim_1) int64 0 1 * noise_chol_stds_dim_0 (noise_chol_stds_dim_0) int64 0 1 * time (time) int64 2 3 4 5 6 7 8 9 ... 44 45 46 47 48 49 50 * betaX_dim_1 (betaX_dim_1) int64 0 1 * noise_chol_dim_0 (noise_chol_dim_0) int64 0 1 2 * lags (lags) int64 1 2 * cross_vars (cross_vars) <U7 'dl_gdp' 'dl_cons' Data variables: alpha (chain, draw, equations) float64 -0.02173 ... 0.04148 noise_chol_corr (chain, draw, noise_chol_corr_dim_0, noise_chol_corr_dim_1) float64 ... noise_chol_stds (chain, draw, noise_chol_stds_dim_0) float64 0.479... betaX (chain, draw, time, betaX_dim_1) float64 0.05801 .... noise_chol (chain, draw, noise_chol_dim_0) float64 0.4793 ...... lag_coefs (chain, draw, equations, lags, cross_vars) float64 ... Attributes: created_at: 2023-02-21T19:20:42.612680 arviz_version: 0.14.0 inference_library: pymc inference_library_version: 5.0.1xarray.Dataset -

- chain: 1

- draw: 500

- 时间: 49

- equations: 2

- chain(chain)int640

array([0])

- draw(draw)int640 1 2 3 4 5 ... 495 496 497 498 499

array([ 0, 1, 2, ..., 497, 498, 499])

- 时间(time)int642 3 4 5 6 7 8 ... 45 46 47 48 49 50

array([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50]) - equations(equations)<U7'dl_gdp' 'dl_cons'

array(['dl_gdp', 'dl_cons'], dtype='<U7')

- obs(chain, draw, time, equations)float640.4478 -0.1462 ... 0.2672 0.00633

array([[[[ 0.44776047, -0.14616646], [ 0.19862307, -1.56585955], [-0.49237353, 0.10341214], ..., [-0.53594629, 0.0108207 ], [-0.60594248, 1.1033946 ], [-0.29404625, -0.98134169]], [[-0.17508436, -0.39153604], [ 1.38455666, 0.46302004], [ 0.7422721 , 0.89894022], ..., [-0.34396897, 0.32617753], [-0.23282001, -0.28712153], [ 0.44837144, 0.56639172]], [[-2.87839455, -0.232425 ], [ 0.9220839 , -0.62541197], [-0.17169051, -0.02795315], ..., ... ..., [ 0.28226659, 1.30781422], [-0.06117757, -2.03648709], [-0.03451084, -0.82340422]], [[-0.1122591 , -0.0502724 ], [-1.00777064, 0.76872509], [ 1.5855008 , -1.32129034], ..., [-1.79166952, -0.68820344], [ 0.11846899, -0.78256063], [-2.06857488, -0.56486839]], [[ 0.18450661, 0.0350353 ], [ 0.20768096, 0.21245028], [ 0.29240891, 0.13727305], ..., [ 0.20834432, 0.04599256], [ 0.21634715, 0.27336735], [ 0.26716229, 0.00633042]]]])

- chainPandasIndex

PandasIndex(Int64Index([0], dtype='int64', name='chain'))

- drawPandasIndex

PandasIndex(Int64Index([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, ... 490, 491, 492, 493, 494, 495, 496, 497, 498, 499], dtype='int64', name='draw', length=500)) - 时间PandasIndex

PandasIndex(Int64Index([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50], dtype='int64', name='time')) - equationsPandasIndex

PandasIndex(Index(['dl_gdp', 'dl_cons'], dtype='object', name='equations'))

- created_at

- 2023-02-21T19:20:42.614050

- arviz_version

- 0.14.0

- inference_library

- pymc

- inference_library_version

- 5.0.1

<xarray.Dataset> Dimensions: (chain: 1, draw: 500, time: 49, equations: 2) Coordinates: * chain (chain) int64 0 * draw (draw) int64 0 1 2 3 4 5 6 7 ... 492 493 494 495 496 497 498 499 * time (time) int64 2 3 4 5 6 7 8 9 10 11 ... 42 43 44 45 46 47 48 49 50 * equations (equations) <U7 'dl_gdp' 'dl_cons' Data variables: obs (chain, draw, time, equations) float64 0.4478 -0.1462 ... 0.00633 Attributes: created_at: 2023-02-21T19:20:42.614050 arviz_version: 0.14.0 inference_library: pymc inference_library_version: 5.0.1xarray.Dataset -

- 时间: 49

- equations: 2

- 时间(time)int642 3 4 5 6 7 8 ... 45 46 47 48 49 50

array([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50]) - equations(equations)<U7'dl_gdp' 'dl_cons'

array(['dl_gdp', 'dl_cons'], dtype='<U7')

- obs(time, equations)float640.04613 0.06834 ... 0.1264 0.05461

array([[ 0.04613358, 0.06834012], [ 0.04171979, 0.03041591], [ 0.05502444, 0.02790996], [ 0.0138517 , 0.02731819], [ 0.07891562, 0.05362182], [ 0.06940226, 0.08433703], [ 0.03026764, 0.04373127], [ 0.03032869, 0.02126963], [ 0.03271127, 0.01319935], [ 0.02257787, -0.04390915], [-0.00244599, 0.00498376], [ 0.04262235, 0.01226503], [ 0.03038967, 0.03741001], [-0.0042925 , 0.02157749], [ 0.04557635, 0.01055227], [ 0.05085863, 0.01996409], [ 0.05651188, 0.04522139], [ 0.08127144, 0.02305265], [ 0.01911258, 0.01992001], [ 0.03288602, 0.02865146], ... [ 0.05728948, 0.04324766], [ 0.02965185, 0.02806114], [ 0.06565545, 0.03202102], [ 0.05577724, 0.06123691], [ 0.04860445, 0.05797527], [ 0.05169516, 0.06437874], [-0.04590363, 0.00356559], [-0.05235144, -0.04743164], [ 0.01739857, -0.01160896], [ 0.01062858, 0.00262455], [-0.00052368, -0.017138 ], [ 0.01259055, -0.00163944], [ 0.08354761, 0.02976708], [ 0.22455252, 0.02970416], [ 0.02021659, 0.04809311], [ 0.0856272 , 0.02608167], [ 0.08645437, 0.04073803], [ 0.04799944, 0.04137956], [ 0.05701317, -0.05335559], [ 0.1264446 , 0.05460824]])

- 时间PandasIndex

PandasIndex(Int64Index([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50], dtype='int64', name='time')) - equationsPandasIndex

PandasIndex(Index(['dl_gdp', 'dl_cons'], dtype='object', name='equations'))

- created_at

- 2023-02-21T19:20:42.614347

- arviz_version

- 0.14.0

- inference_library

- pymc

- inference_library_version

- 5.0.1

<xarray.Dataset> Dimensions: (time: 49, equations: 2) Coordinates: * time (time) int64 2 3 4 5 6 7 8 9 10 11 ... 42 43 44 45 46 47 48 49 50 * equations (equations) <U7 'dl_gdp' 'dl_cons' Data variables: obs (time, equations) float64 0.04613 0.06834 ... 0.1264 0.05461 Attributes: created_at: 2023-02-21T19:20:42.614347 arviz_version: 0.14.0 inference_library: pymc inference_library_version: 5.0.1xarray.Dataset -

- 时间: 49

- equations: 2

- 时间(time)int642 3 4 5 6 7 8 ... 45 46 47 48 49 50

array([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50]) - equations(equations)<U7'dl_gdp' 'dl_cons'

array(['dl_gdp', 'dl_cons'], dtype='<U7')

- data_obs(time, equations)float640.04613 0.06834 ... 0.1264 0.05461

array([[ 0.04613358, 0.06834012], [ 0.04171979, 0.03041591], [ 0.05502444, 0.02790996], [ 0.0138517 , 0.02731819], [ 0.07891562, 0.05362182], [ 0.06940226, 0.08433703], [ 0.03026764, 0.04373127], [ 0.03032869, 0.02126963], [ 0.03271127, 0.01319935], [ 0.02257787, -0.04390915], [-0.00244599, 0.00498376], [ 0.04262235, 0.01226503], [ 0.03038967, 0.03741001], [-0.0042925 , 0.02157749], [ 0.04557635, 0.01055227], [ 0.05085863, 0.01996409], [ 0.05651188, 0.04522139], [ 0.08127144, 0.02305265], [ 0.01911258, 0.01992001], [ 0.03288602, 0.02865146], ... [ 0.05728948, 0.04324766], [ 0.02965185, 0.02806114], [ 0.06565545, 0.03202102], [ 0.05577724, 0.06123691], [ 0.04860445, 0.05797527], [ 0.05169516, 0.06437874], [-0.04590363, 0.00356559], [-0.05235144, -0.04743164], [ 0.01739857, -0.01160896], [ 0.01062858, 0.00262455], [-0.00052368, -0.017138 ], [ 0.01259055, -0.00163944], [ 0.08354761, 0.02976708], [ 0.22455252, 0.02970416], [ 0.02021659, 0.04809311], [ 0.0856272 , 0.02608167], [ 0.08645437, 0.04073803], [ 0.04799944, 0.04137956], [ 0.05701317, -0.05335559], [ 0.1264446 , 0.05460824]])

- 时间PandasIndex

PandasIndex(Int64Index([ 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50], dtype='int64', name='time')) - equationsPandasIndex

PandasIndex(Index(['dl_gdp', 'dl_cons'], dtype='object', name='equations'))

- created_at

- 2023-02-21T19:20:42.614628

- arviz_version

- 0.14.0

- inference_library

- pymc

- inference_library_version

- 5.0.1

<xarray.Dataset> Dimensions: (time: 49, equations: 2) Coordinates: * time (time) int64 2 3 4 5 6 7 8 9 10 11 ... 42 43 44 45 46 47 48 49 50 * equations (equations) <U7 'dl_gdp' 'dl_cons' Data variables: data_obs (time, equations) float64 0.04613 0.06834 ... 0.1264 0.05461 Attributes: created_at: 2023-02-21T19:20:42.614628 arviz_version: 0.14.0 inference_library: pymc inference_library_version: 5.0.1xarray.Dataset

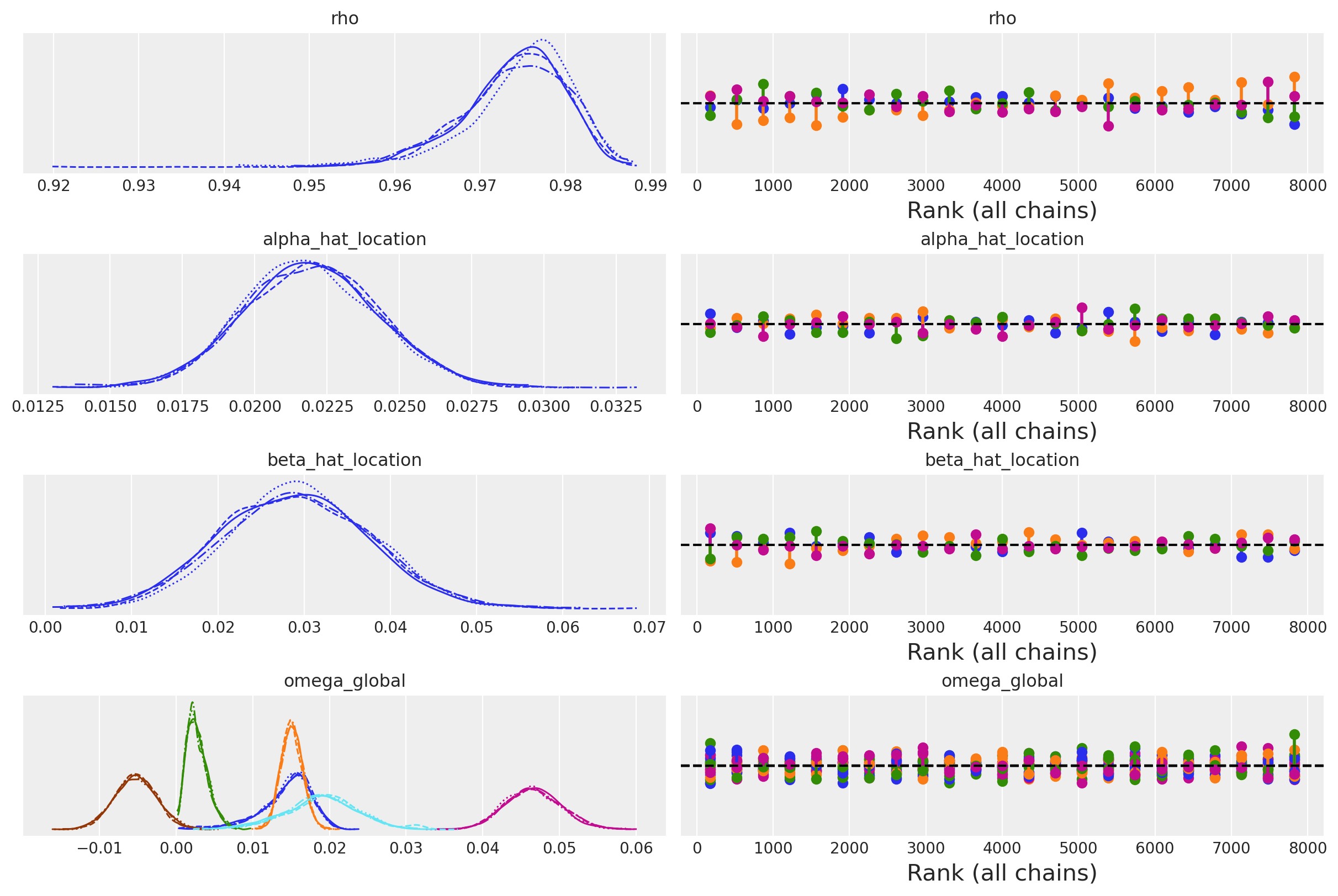

az.plot_trace(idata_ireland, var_names=["lag_coefs", "alpha", "betaX"], kind="rank_vlines");

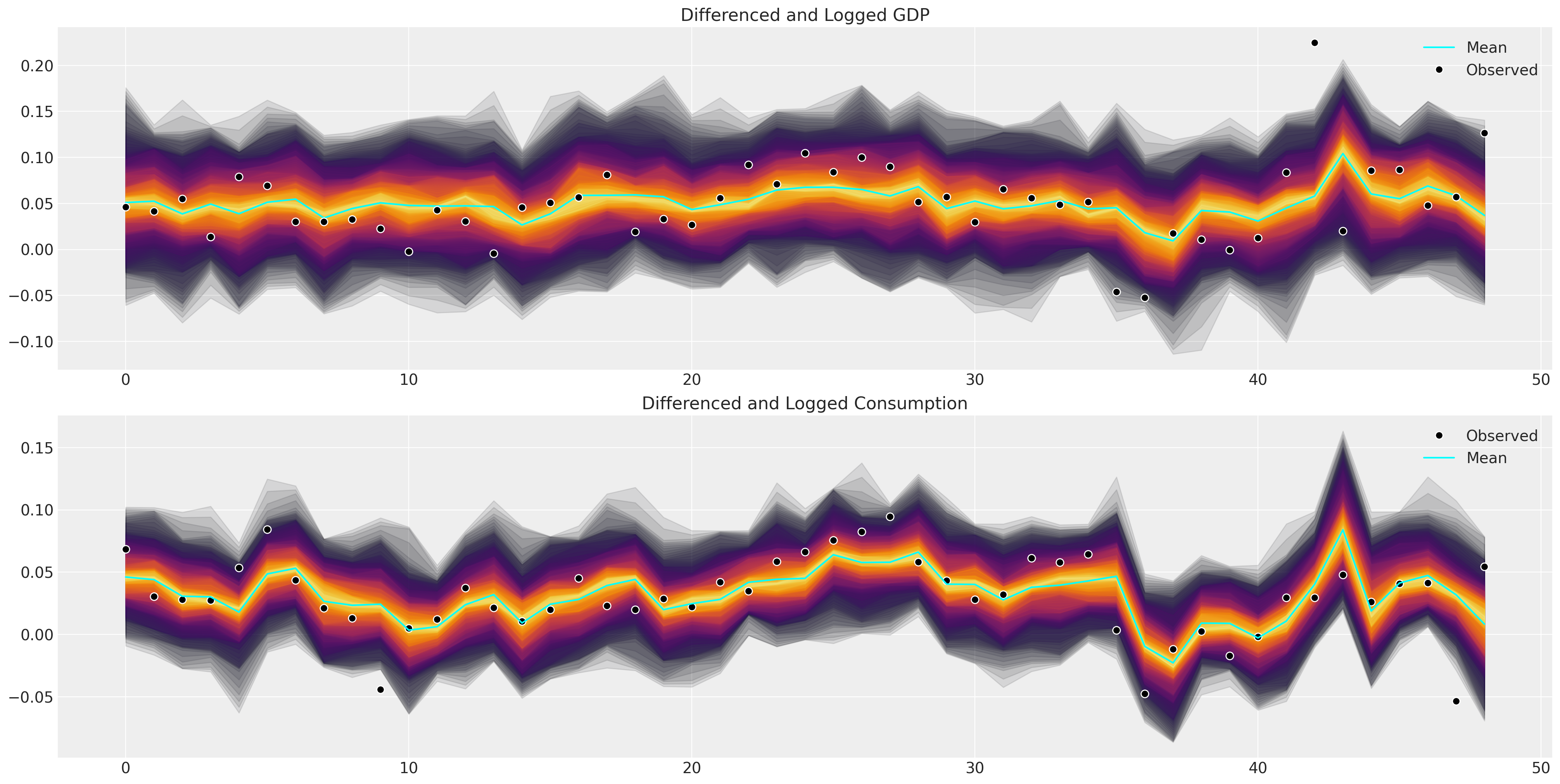

def plot_ppc_macro(idata, df, group="posterior_predictive"):

df = pd.DataFrame(idata["observed_data"]["obs"].data, columns=["dl_gdp", "dl_cons"])

fig, axs = plt.subplots(2, 1, figsize=(20, 10))

axs = axs.flatten()

ppc = az.extract(idata, group=group, num_samples=100)["obs"]

shade_background(ppc, axs, 0, "inferno")

axs[0].plot(np.arange(ppc.shape[0]), ppc[:, 0, :].mean(axis=1), color="cyan", label="Mean")

axs[0].plot(df["dl_gdp"], "o", mfc="black", mec="white", mew=1, markersize=7, label="Observed")

axs[0].set_title("Differenced and Logged GDP")

axs[0].legend()

shade_background(ppc, axs, 1, "inferno")

axs[1].plot(df["dl_cons"], "o", mfc="black", mec="white", mew=1, markersize=7, label="Observed")

axs[1].plot(np.arange(ppc.shape[0]), ppc[:, 1, :].mean(axis=1), color="cyan", label="Mean")

axs[1].set_title("Differenced and Logged Consumption")

axs[1].legend()

plot_ppc_macro(idata_ireland, ireland_df)

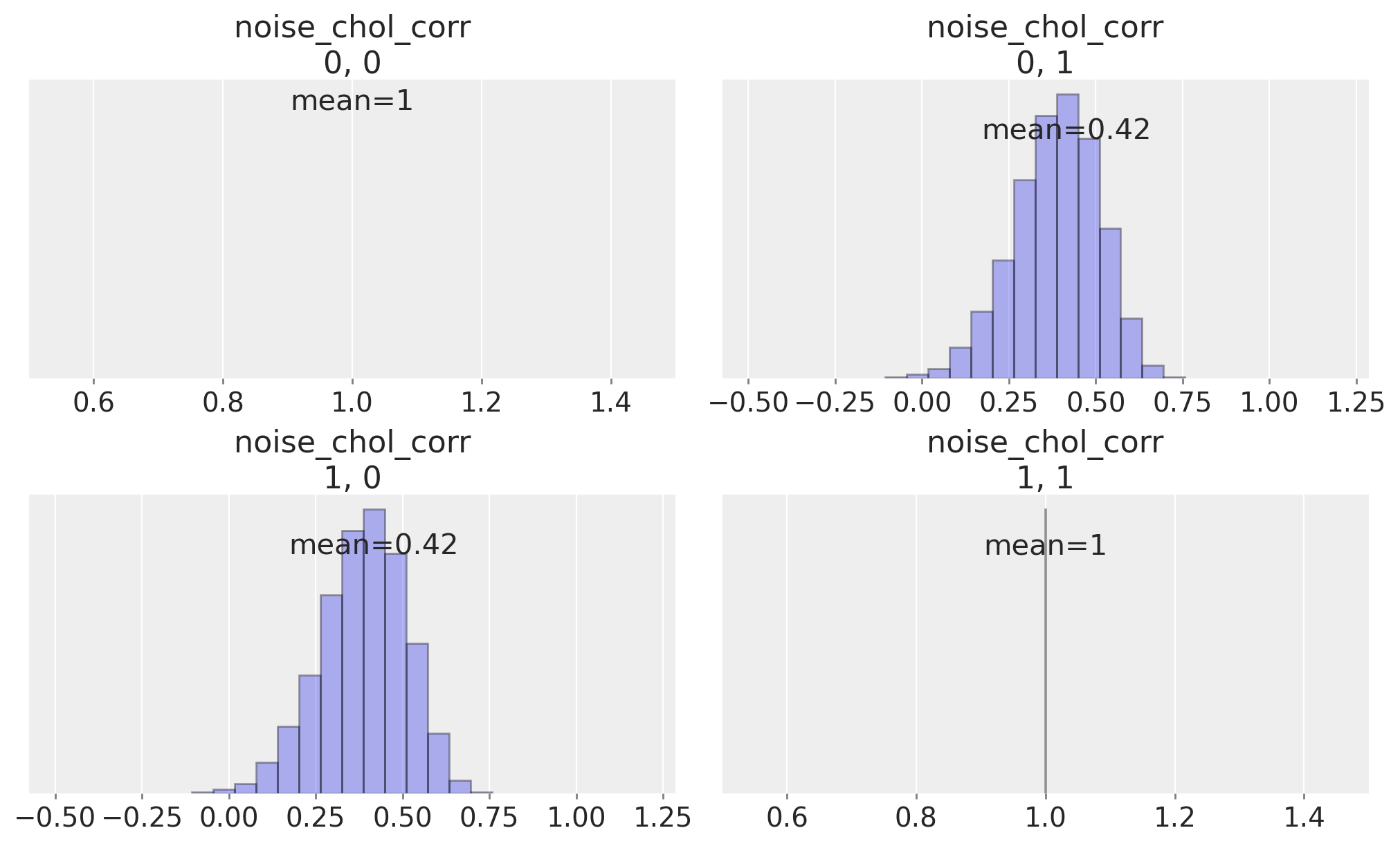

ax = az.plot_posterior(

idata_ireland,

var_names="noise_chol_corr",

hdi_prob="hide",

point_estimate="mean",

grid=(2, 2),

kind="hist",

ec="black",

figsize=(10, 6),

)

与 Statsmodels 的比较#

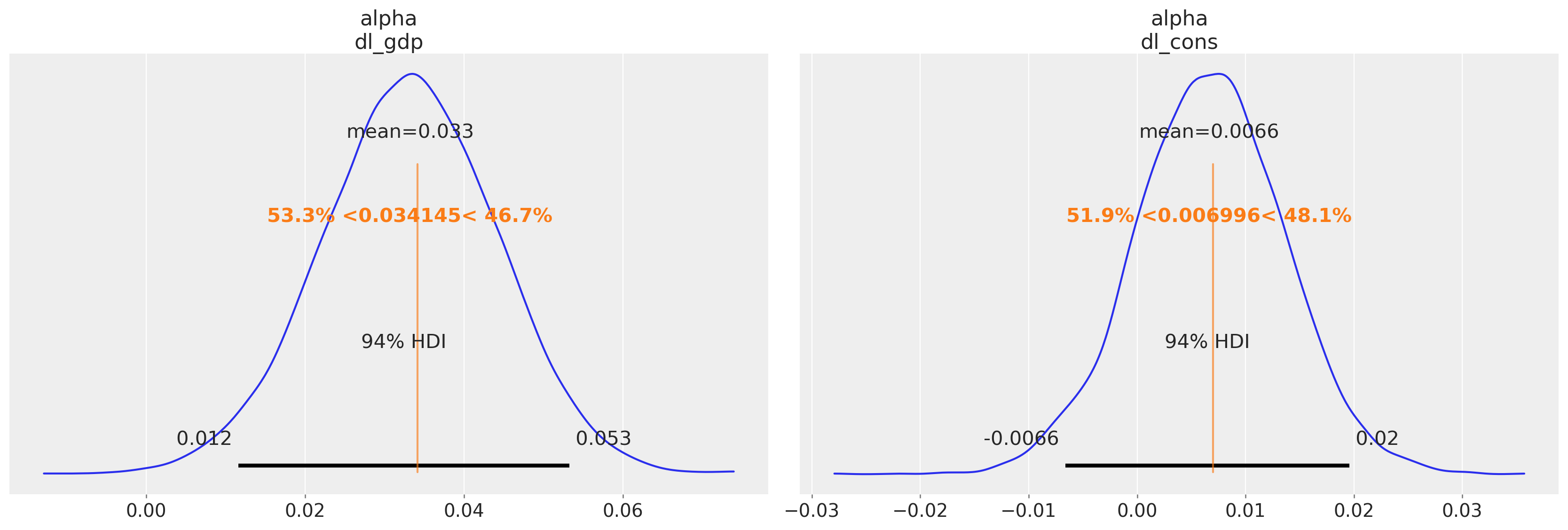

值得将这些模型拟合与 Statsmodels 实现的模型拟合进行比较,只是为了看看我们是否可以恢复类似的故事。

VAR_model = sm.tsa.VAR(ireland_df[["dl_gdp", "dl_cons"]])

results = VAR_model.fit(2, trend="c")

results.params

| dl_gdp | dl_cons | |

|---|---|---|

| const | 0.034145 | 0.006996 |

| L1.dl_gdp | 0.324904 | 0.330003 |

| L1.dl_cons | 0.076629 | 0.305824 |

| L2.dl_gdp | 0.137721 | -0.053677 |

| L2.dl_cons | -0.278745 | 0.033728 |

截距参数与我们的贝叶斯模型大致一致,但由滞后项的估计值定义的隐含关系存在一些差异。

corr = pd.DataFrame(results.resid_corr, columns=["dl_gdp", "dl_cons"])

corr.index = ["dl_gdp", "dl_cons"]

corr

| dl_gdp | dl_cons | |

|---|---|---|

| dl_gdp | 1.000000 | 0.435807 |

| dl_cons | 0.435807 | 1.000000 |

statsmodels 报告的残差相关性估计值与我们的贝叶斯模型中变量之间的多元高斯相关性非常吻合。

az.summary(idata_ireland, var_names=["alpha", "lag_coefs", "noise_chol_corr"])

/Users/nathanielforde/mambaforge/envs/myjlabenv/lib/python3.11/site-packages/arviz/stats/diagnostics.py:584: RuntimeWarning: invalid value encountered in scalar divide

(between_chain_variance / within_chain_variance + num_samples - 1) / (num_samples)

| 均值 | 标准差 | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| alpha[dl_gdp] | 0.033 | 0.011 | 0.012 | 0.053 | 0.000 | 0.000 | 6683.0 | 5919.0 | 1.0 |

| alpha[dl_cons] | 0.007 | 0.007 | -0.007 | 0.020 | 0.000 | 0.000 | 7651.0 | 5999.0 | 1.0 |

| lag_coefs[dl_gdp, 1, dl_gdp] | 0.321 | 0.170 | 0.008 | 0.642 | 0.002 | 0.002 | 6984.0 | 6198.0 | 1.0 |

| lag_coefs[dl_gdp, 1, dl_cons] | 0.071 | 0.273 | -0.447 | 0.582 | 0.003 | 0.003 | 7376.0 | 5466.0 | 1.0 |

| lag_coefs[dl_gdp, 2, dl_gdp] | 0.133 | 0.190 | -0.228 | 0.488 | 0.002 | 0.002 | 7471.0 | 6128.0 | 1.0 |

| lag_coefs[dl_gdp, 2, dl_cons] | -0.235 | 0.269 | -0.748 | 0.259 | 0.003 | 0.002 | 8085.0 | 5963.0 | 1.0 |

| lag_coefs[dl_cons, 1, dl_gdp] | 0.331 | 0.106 | 0.133 | 0.528 | 0.001 | 0.001 | 7670.0 | 6360.0 | 1.0 |

| lag_coefs[dl_cons, 1, dl_cons] | 0.302 | 0.170 | -0.012 | 0.616 | 0.002 | 0.001 | 7963.0 | 6150.0 | 1.0 |

| lag_coefs[dl_cons, 2, dl_gdp] | -0.054 | 0.118 | -0.279 | 0.163 | 0.001 | 0.001 | 8427.0 | 6296.0 | 1.0 |

| lag_coefs[dl_cons, 2, dl_cons] | 0.048 | 0.170 | -0.259 | 0.378 | 0.002 | 0.002 | 8669.0 | 6264.0 | 1.0 |

| noise_chol_corr[0, 0] | 1.000 | 0.000 | 1.000 | 1.000 | 0.000 | 0.000 | 8000.0 | 8000.0 | NaN |

| noise_chol_corr[0, 1] | 0.416 | 0.123 | 0.180 | 0.633 | 0.001 | 0.001 | 9155.0 | 6052.0 | 1.0 |

| noise_chol_corr[1, 0] | 0.416 | 0.123 | 0.180 | 0.633 | 0.001 | 0.001 | 9155.0 | 6052.0 | 1.0 |

| noise_chol_corr[1, 1] | 1.000 | 0.000 | 1.000 | 1.000 | 0.000 | 0.000 | 7871.0 | 8000.0 | 1.0 |

我们绘制 alpha 参数估计值与 Statsmodels 估计值的对比图

az.plot_posterior(idata_ireland, var_names=["alpha"], ref_val=[0.034145, 0.006996]);

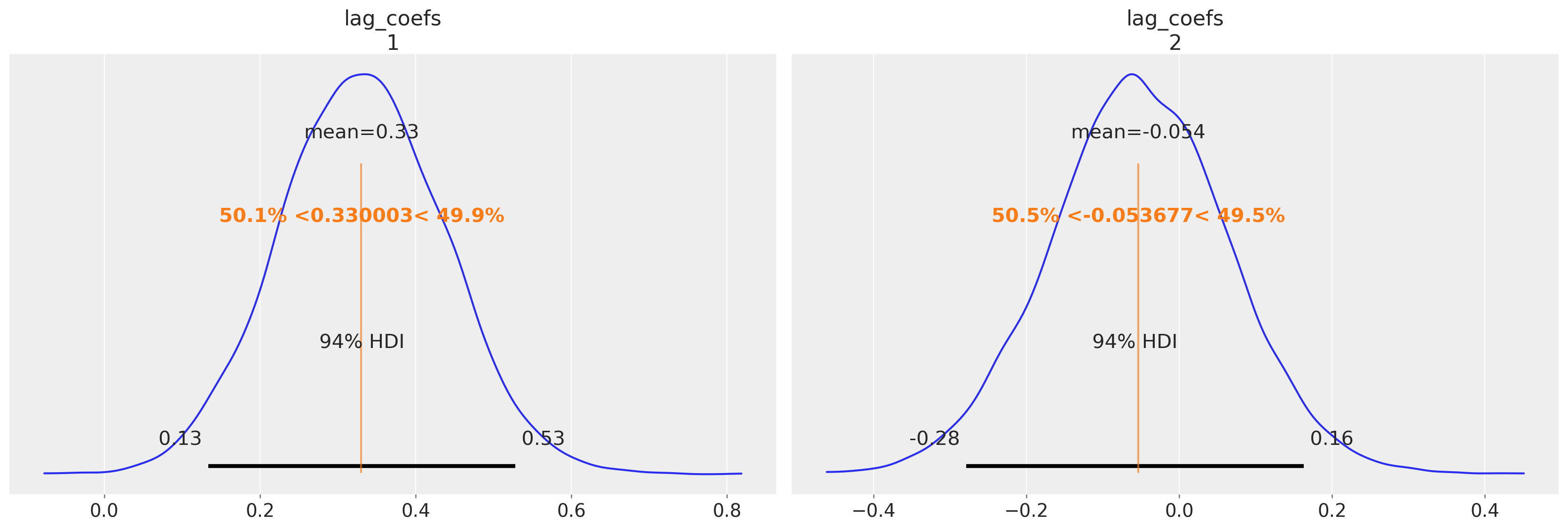

az.plot_posterior(

idata_ireland,

var_names=["lag_coefs"],

ref_val=[0.330003, -0.053677],

coords={"equations": "dl_cons", "lags": [1, 2], "cross_vars": "dl_gdp"},

);

我们再次可以看到贝叶斯 VAR 模型如何恢复许多相同的故事。两个方程的 alpha 项的估计值具有相似的量级,并且第一个滞后的 GDP 数字与消费之间存在明显的关系,以及非常相似的协方差结构。

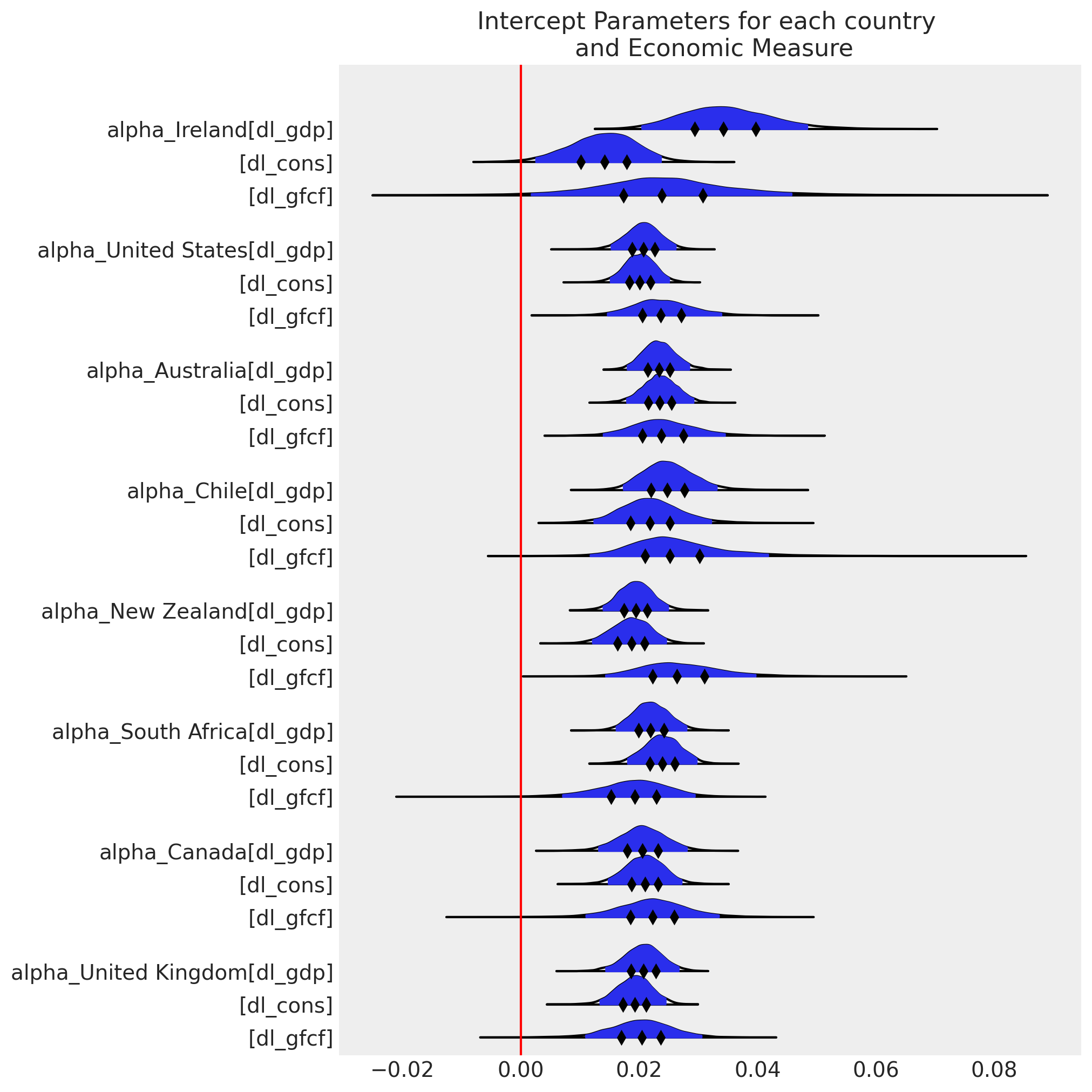

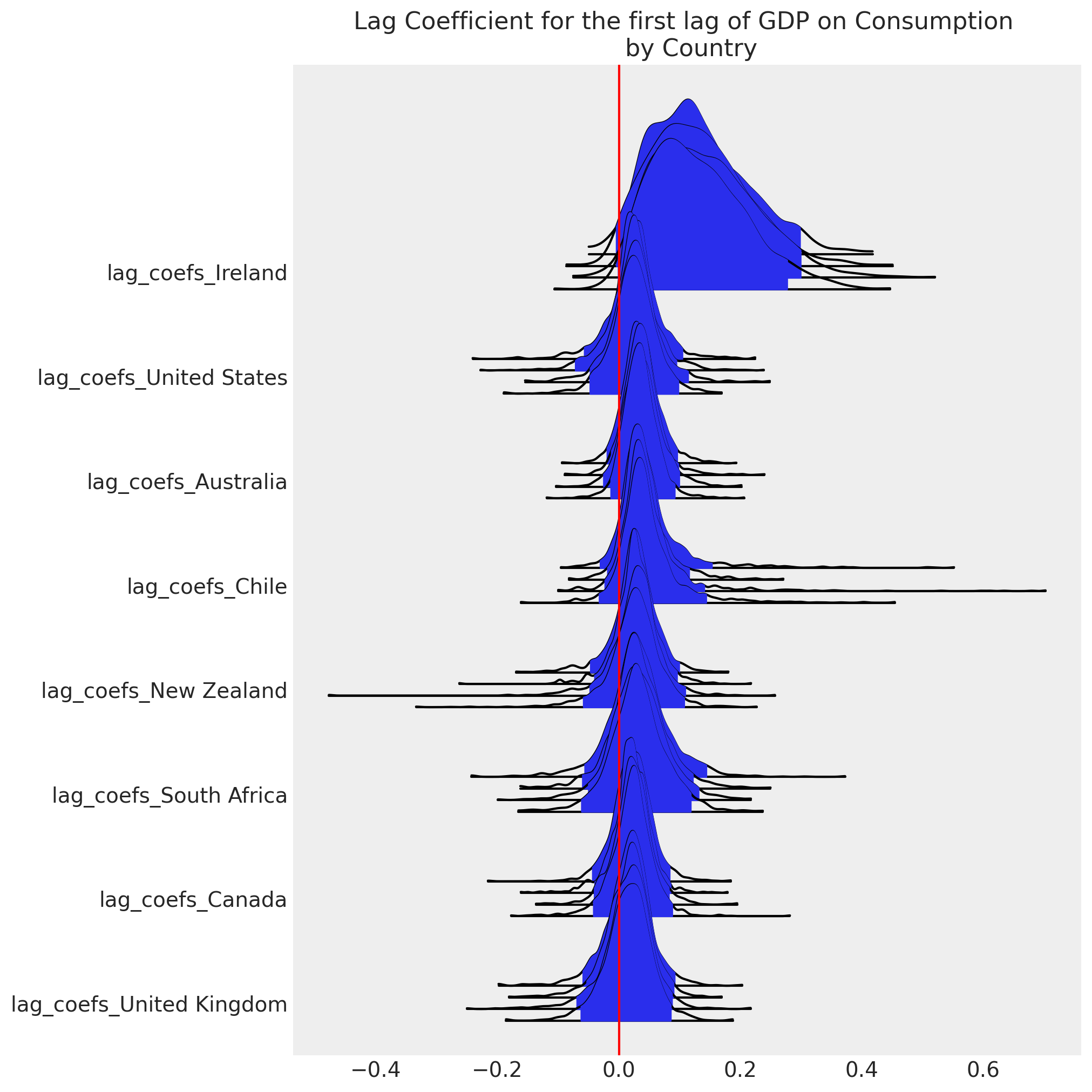

添加贝叶斯扭曲:分层 VAR#

此外,如果我们想对多个国家以及国家层面这些经济指标之间的关系进行建模,我们可以添加一些分层参数。这在我们的时间序列数据相当短的情况下是一种有用的技术,因为它允许我们“借用”跨国家的信息来告知关键参数的估计。

def make_hierarchical_model(n_lags, n_eqs, df, group_field, prior_checks=True):

cols = [col for col in df.columns if col != group_field]

coords = {"lags": np.arange(n_lags) + 1, "equations": cols, "cross_vars": cols}

groups = df[group_field].unique()

with pm.Model(coords=coords) as model:

## Hierarchical Priors

rho = pm.Beta("rho", alpha=2, beta=2)

alpha_hat_location = pm.Normal("alpha_hat_location", 0, 0.1)

alpha_hat_scale = pm.InverseGamma("alpha_hat_scale", 3, 0.5)

beta_hat_location = pm.Normal("beta_hat_location", 0, 0.1)

beta_hat_scale = pm.InverseGamma("beta_hat_scale", 3, 0.5)

omega_global, _, _ = pm.LKJCholeskyCov(

"omega_global", n=n_eqs, eta=1.0, sd_dist=pm.Exponential.dist(1)

)

for grp in groups:

df_grp = df[df[group_field] == grp][cols]

z_scale_beta = pm.InverseGamma(f"z_scale_beta_{grp}", 3, 0.5)

z_scale_alpha = pm.InverseGamma(f"z_scale_alpha_{grp}", 3, 0.5)

lag_coefs = pm.Normal(

f"lag_coefs_{grp}",

mu=beta_hat_location,

sigma=beta_hat_scale * z_scale_beta,

dims=["equations", "lags", "cross_vars"],

)

alpha = pm.Normal(

f"alpha_{grp}",

mu=alpha_hat_location,

sigma=alpha_hat_scale * z_scale_alpha,

dims=("equations",),

)

betaX = calc_ar_step(lag_coefs, n_eqs, n_lags, df_grp)

betaX = pm.Deterministic(f"betaX_{grp}", betaX)

mean = alpha + betaX

n = df_grp.shape[1]

noise_chol, _, _ = pm.LKJCholeskyCov(

f"noise_chol_{grp}", eta=10, n=n, sd_dist=pm.Exponential.dist(1)

)

omega = pm.Deterministic(f"omega_{grp}", rho * omega_global + (1 - rho) * noise_chol)

obs = pm.MvNormal(f"obs_{grp}", mu=mean, chol=omega, observed=df_grp.values[n_lags:])

if prior_checks:

idata = pm.sample_prior_predictive()

return model, idata

else:

idata = pm.sample_prior_predictive()

idata.extend(sample_blackjax_nuts(2000, random_seed=120))

pm.sample_posterior_predictive(idata, extend_inferencedata=True)

return model, idata

模型设计允许通过允许我们将特定国家的估计值从分层均值移开来非中心化关键似然的参数化,用于每个国家组成部分。这是通过 rho * omega_global + (1 - rho) * noise_chol 行完成的。参数 rho 确定每个国家的数据对经济变量之间协方差关系估计的贡献份额。类似的特定国家调整使用 z_alpha_scale 和 z_beta_scale 参数进行。

df_final = gdp_hierarchical[["country", "dl_gdp", "dl_cons", "dl_gfcf"]]

model_full_test, idata_full_test = make_hierarchical_model(

2,

3,

df_final,

"country",

prior_checks=False,

)

Sampling: [alpha_Australia, alpha_Canada, alpha_Chile, alpha_Ireland, alpha_New Zealand, alpha_South Africa, alpha_United Kingdom, alpha_United States, alpha_hat_location, alpha_hat_scale, beta_hat_location, beta_hat_scale, lag_coefs_Australia, lag_coefs_Canada, lag_coefs_Chile, lag_coefs_Ireland, lag_coefs_New Zealand, lag_coefs_South Africa, lag_coefs_United Kingdom, lag_coefs_United States, noise_chol_Australia, noise_chol_Canada, noise_chol_Chile, noise_chol_Ireland, noise_chol_New Zealand, noise_chol_South Africa, noise_chol_United Kingdom, noise_chol_United States, obs_Australia, obs_Canada, obs_Chile, obs_Ireland, obs_New Zealand, obs_South Africa, obs_United Kingdom, obs_United States, omega_global, rho, z_scale_alpha_Australia, z_scale_alpha_Canada, z_scale_alpha_Chile, z_scale_alpha_Ireland, z_scale_alpha_New Zealand, z_scale_alpha_South Africa, z_scale_alpha_United Kingdom, z_scale_alpha_United States, z_scale_beta_Australia, z_scale_beta_Canada, z_scale_beta_Chile, z_scale_beta_Ireland, z_scale_beta_New Zealand, z_scale_beta_South Africa, z_scale_beta_United Kingdom, z_scale_beta_United States]

Compiling...

Compilation time = 0:00:12.203665

Sampling...

Sampling time = 0:01:13.331452

Transforming variables...

Transformation time = 0:00:13.215405

Sampling: [obs_Australia, obs_Canada, obs_Chile, obs_Ireland, obs_New Zealand, obs_South Africa, obs_United Kingdom, obs_United States]

idata_full_test

-

- chain: 4

- draw: 2000

- equations: 3

- lags: 2

- cross_vars: 3

- omega_global_dim_0: 6

- noise_chol_Australia_dim_0: 6

- noise_chol_Canada_dim_0: 6

- noise_chol_Chile_dim_0: 6

- noise_chol_Ireland_dim_0: 6

- noise_chol_New Zealand_dim_0: 6

- noise_chol_South Africa_dim_0: 6

- noise_chol_United Kingdom_dim_0: 6

- noise_chol_United States_dim_0: 6

- omega_global_corr_dim_0: 3

- omega_global_corr_dim_1: 3

- omega_global_stds_dim_0: 3

- betaX_Australia_dim_0: 49

- betaX_Australia_dim_1: 3

- noise_chol_Australia_corr_dim_0: 3

- noise_chol_Australia_corr_dim_1: 3

- noise_chol_Australia_stds_dim_0: 3

- omega_Australia_dim_0: 3

- omega_Australia_dim_1: 3

- betaX_Canada_dim_0: 22

- betaX_Canada_dim_1: 3

- noise_chol_Canada_corr_dim_0: 3

- noise_chol_Canada_corr_dim_1: 3

- noise_chol_Canada_stds_dim_0: 3

- omega_Canada_dim_0: 3

- omega_Canada_dim_1: 3

- betaX_Chile_dim_0: 49

- betaX_Chile_dim_1: 3

- noise_chol_Chile_corr_dim_0: 3

- noise_chol_Chile_corr_dim_1: 3

- noise_chol_Chile_stds_dim_0: 3

- omega_Chile_dim_0: 3

- omega_Chile_dim_1: 3

- betaX_Ireland_dim_0: 49

- betaX_Ireland_dim_1: 3

- noise_chol_Ireland_corr_dim_0: 3

- noise_chol_Ireland_corr_dim_1: 3

- noise_chol_Ireland_stds_dim_0: 3

- omega_Ireland_dim_0: 3

- omega_Ireland_dim_1: 3

- betaX_New Zealand_dim_0: 41

- betaX_New Zealand_dim_1: 3

- noise_chol_New Zealand_corr_dim_0: 3

- noise_chol_New Zealand_corr_dim_1: 3

- noise_chol_New Zealand_stds_dim_0: 3

- omega_New Zealand_dim_0: 3

- omega_New Zealand_dim_1: 3

- betaX_South Africa_dim_0: 49

- betaX_South Africa_dim_1: 3

- noise_chol_South Africa_corr_dim_0: 3

- noise_chol_South Africa_corr_dim_1: 3

- noise_chol_South Africa_stds_dim_0: 3

- omega_South Africa_dim_0: 3

- omega_South Africa_dim_1: 3

- betaX_United Kingdom_dim_0: 49

- betaX_United Kingdom_dim_1: 3

- noise_chol_United Kingdom_corr_dim_0: 3

- noise_chol_United Kingdom_corr_dim_1: 3

- noise_chol_United Kingdom_stds_dim_0: 3

- omega_United Kingdom_dim_0: 3

- omega_United Kingdom_dim_1: 3

- betaX_United States_dim_0: 46

- betaX_United States_dim_1: 3

- noise_chol_United States_corr_dim_0: 3

- noise_chol_United States_corr_dim_1: 3

- noise_chol_United States_stds_dim_0: 3

- omega_United States_dim_0: 3

- omega_United States_dim_1: 3

- chain(chain)int640 1 2 3

array([0, 1, 2, 3])

- draw(draw)int640 1 2 3 4 ... 1996 1997 1998 1999

array([ 0, 1, 2, ..., 1997, 1998, 1999])

- equations(equations)<U7'dl_gdp' 'dl_cons' 'dl_gfcf'

array(['dl_gdp', 'dl_cons', 'dl_gfcf'], dtype='<U7')

- lags(lags)int641 2

array([1, 2])

- cross_vars(cross_vars)<U7'dl_gdp' 'dl_cons' 'dl_gfcf'

array(['dl_gdp', 'dl_cons', 'dl_gfcf'], dtype='<U7')

- omega_global_dim_0(omega_global_dim_0)int640 1 2 3 4 5

array([0, 1, 2, 3, 4, 5])

- noise_chol_Australia_dim_0(noise_chol_Australia_dim_0)int640 1 2 3 4 5

array([0, 1, 2, 3, 4, 5])

- noise_chol_Canada_dim_0(noise_chol_Canada_dim_0)int640 1 2 3 4 5

array([0, 1, 2, 3, 4, 5])

- noise_chol_Chile_dim_0(noise_chol_Chile_dim_0)int640 1 2 3 4 5

array([0, 1, 2, 3, 4, 5])

- noise_chol_Ireland_dim_0(noise_chol_Ireland_dim_0)int640 1 2 3 4 5

array([0, 1, 2, 3, 4, 5])

- noise_chol_New Zealand_dim_0(noise_chol_New Zealand_dim_0)int640 1 2 3 4 5

array([0, 1, 2, 3, 4, 5])

- noise_chol_South Africa_dim_0(noise_chol_South Africa_dim_0)int640 1 2 3 4 5

array([0, 1, 2, 3, 4, 5])

- noise_chol_United Kingdom_dim_0(noise_chol_United Kingdom_dim_0)int640 1 2 3 4 5

array([0, 1, 2, 3, 4, 5])

- noise_chol_United States_dim_0(noise_chol_United States_dim_0)int640 1 2 3 4 5

array([0, 1, 2, 3, 4, 5])

- omega_global_corr_dim_0(omega_global_corr_dim_0)int640 1 2

array([0, 1, 2])

- omega_global_corr_dim_1(omega_global_corr_dim_1)int640 1 2

array([0, 1, 2])

- omega_global_stds_dim_0(omega_global_stds_dim_0)int640 1 2

array([0, 1, 2])

- betaX_Australia_dim_0(betaX_Australia_dim_0)int640 1 2 3 4 5 6 ... 43 44 45 46 47 48

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48]) - betaX_Australia_dim_1(betaX_Australia_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_Australia_corr_dim_0(noise_chol_Australia_corr_dim_0)int640 1 2

array([0, 1, 2])

- noise_chol_Australia_corr_dim_1(noise_chol_Australia_corr_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_Australia_stds_dim_0(noise_chol_Australia_stds_dim_0)int640 1 2

array([0, 1, 2])

- omega_Australia_dim_0(omega_Australia_dim_0)int640 1 2

array([0, 1, 2])

- omega_Australia_dim_1(omega_Australia_dim_1)int640 1 2

array([0, 1, 2])

- betaX_Canada_dim_0(betaX_Canada_dim_0)int640 1 2 3 4 5 6 ... 16 17 18 19 20 21

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21]) - betaX_Canada_dim_1(betaX_Canada_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_Canada_corr_dim_0(noise_chol_Canada_corr_dim_0)int640 1 2

array([0, 1, 2])

- noise_chol_Canada_corr_dim_1(noise_chol_Canada_corr_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_Canada_stds_dim_0(noise_chol_Canada_stds_dim_0)int640 1 2

array([0, 1, 2])

- omega_Canada_dim_0(omega_Canada_dim_0)int640 1 2

array([0, 1, 2])

- omega_Canada_dim_1(omega_Canada_dim_1)int640 1 2

array([0, 1, 2])

- betaX_Chile_dim_0(betaX_Chile_dim_0)int640 1 2 3 4 5 6 ... 43 44 45 46 47 48

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48]) - betaX_Chile_dim_1(betaX_Chile_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_Chile_corr_dim_0(noise_chol_Chile_corr_dim_0)int640 1 2

array([0, 1, 2])

- noise_chol_Chile_corr_dim_1(noise_chol_Chile_corr_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_Chile_stds_dim_0(noise_chol_Chile_stds_dim_0)int640 1 2

array([0, 1, 2])

- omega_Chile_dim_0(omega_Chile_dim_0)int640 1 2

array([0, 1, 2])

- omega_Chile_dim_1(omega_Chile_dim_1)int640 1 2

array([0, 1, 2])

- betaX_Ireland_dim_0(betaX_Ireland_dim_0)int640 1 2 3 4 5 6 ... 43 44 45 46 47 48

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48]) - betaX_Ireland_dim_1(betaX_Ireland_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_Ireland_corr_dim_0(noise_chol_Ireland_corr_dim_0)int640 1 2

array([0, 1, 2])

- noise_chol_Ireland_corr_dim_1(noise_chol_Ireland_corr_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_Ireland_stds_dim_0(noise_chol_Ireland_stds_dim_0)int640 1 2

array([0, 1, 2])

- omega_Ireland_dim_0(omega_Ireland_dim_0)int640 1 2

array([0, 1, 2])

- omega_Ireland_dim_1(omega_Ireland_dim_1)int640 1 2

array([0, 1, 2])

- betaX_New Zealand_dim_0(betaX_New Zealand_dim_0)int640 1 2 3 4 5 6 ... 35 36 37 38 39 40

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40]) - betaX_New Zealand_dim_1(betaX_New Zealand_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_New Zealand_corr_dim_0(noise_chol_New Zealand_corr_dim_0)int640 1 2

array([0, 1, 2])

- noise_chol_New Zealand_corr_dim_1(noise_chol_New Zealand_corr_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_New Zealand_stds_dim_0(noise_chol_New Zealand_stds_dim_0)int640 1 2

array([0, 1, 2])

- omega_New Zealand_dim_0(omega_New Zealand_dim_0)int640 1 2

array([0, 1, 2])

- omega_New Zealand_dim_1(omega_New Zealand_dim_1)int640 1 2

array([0, 1, 2])

- betaX_South Africa_dim_0(betaX_South Africa_dim_0)int640 1 2 3 4 5 6 ... 43 44 45 46 47 48

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48]) - betaX_South Africa_dim_1(betaX_South Africa_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_South Africa_corr_dim_0(noise_chol_South Africa_corr_dim_0)int640 1 2

array([0, 1, 2])

- noise_chol_South Africa_corr_dim_1(noise_chol_South Africa_corr_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_South Africa_stds_dim_0(noise_chol_South Africa_stds_dim_0)int640 1 2

array([0, 1, 2])

- omega_South Africa_dim_0(omega_South Africa_dim_0)int640 1 2

array([0, 1, 2])

- omega_South Africa_dim_1(omega_South Africa_dim_1)int640 1 2

array([0, 1, 2])

- betaX_United Kingdom_dim_0(betaX_United Kingdom_dim_0)int640 1 2 3 4 5 6 ... 43 44 45 46 47 48

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48]) - betaX_United Kingdom_dim_1(betaX_United Kingdom_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_United Kingdom_corr_dim_0(noise_chol_United Kingdom_corr_dim_0)int640 1 2

array([0, 1, 2])

- noise_chol_United Kingdom_corr_dim_1(noise_chol_United Kingdom_corr_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_United Kingdom_stds_dim_0(noise_chol_United Kingdom_stds_dim_0)int640 1 2

array([0, 1, 2])

- omega_United Kingdom_dim_0(omega_United Kingdom_dim_0)int640 1 2

array([0, 1, 2])

- omega_United Kingdom_dim_1(omega_United Kingdom_dim_1)int640 1 2

array([0, 1, 2])

- betaX_United States_dim_0(betaX_United States_dim_0)int640 1 2 3 4 5 6 ... 40 41 42 43 44 45

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45]) - betaX_United States_dim_1(betaX_United States_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_United States_corr_dim_0(noise_chol_United States_corr_dim_0)int640 1 2

array([0, 1, 2])

- noise_chol_United States_corr_dim_1(noise_chol_United States_corr_dim_1)int640 1 2

array([0, 1, 2])

- noise_chol_United States_stds_dim_0(noise_chol_United States_stds_dim_0)int640 1 2

array([0, 1, 2])

- omega_United States_dim_0(omega_United States_dim_0)int640 1 2

array([0, 1, 2])

- omega_United States_dim_1(omega_United States_dim_1)int640 1 2

array([0, 1, 2])

- alpha_hat_location(chain, draw)float640.01867 0.02452 ... 0.02525 0.02439

array([[0.0186718 , 0.02451757, 0.02175917, ..., 0.02313991, 0.02264565, 0.02262757], [0.02194229, 0.01975299, 0.01973764, ..., 0.02332028, 0.02396733, 0.02430533], [0.02017885, 0.02004322, 0.01743769, ..., 0.02143034, 0.0220604 , 0.02622348], [0.01845575, 0.02208645, 0.01699277, ..., 0.02487232, 0.02524865, 0.02439422]]) - beta_hat_location(chain, draw)float640.03707 0.03328 ... 0.01597 0.02013

array([[0.03707199, 0.03328202, 0.03327573, ..., 0.02022314, 0.01849649, 0.02157836], [0.02625807, 0.03075176, 0.03222849, ..., 0.02858588, 0.01833262, 0.0215363 ], [0.03578886, 0.03454472, 0.03803878, ..., 0.02762439, 0.0293772 , 0.0225766 ], [0.02942673, 0.03678047, 0.03405673, ..., 0.02473977, 0.01597052, 0.0201329 ]]) - lag_coefs_Australia(chain, draw, equations, lags, cross_vars)float640.03359 0.02897 ... -0.04637

array([[[[[ 3.35876842e-02, 2.89656460e-02, 1.44346827e-02], [ 8.37580972e-02, 3.24134878e-02, -2.82482944e-02]], [[ 7.26090826e-02, -2.13751601e-02, 4.48405788e-02], [-8.62745446e-03, 6.50512197e-02, 3.53772829e-02]], [[ 1.33485138e-01, -1.01438292e-02, -3.84827532e-02], [ 8.71983706e-02, 4.94076769e-02, 1.65164026e-02]]], [[[-1.49945684e-02, 1.26595954e-01, 1.85231803e-02], [ 8.36634775e-02, -2.39762157e-02, -4.01462709e-02]], [[ 4.48792564e-03, 8.64514159e-02, 4.15399598e-02], [ 4.96201513e-02, 4.76304546e-02, -4.78025294e-03]], [[ 3.01187469e-02, 3.96725859e-03, 5.16570055e-02], [ 5.89717588e-02, 1.02783667e-01, -5.68183027e-02]]], ... [[[-6.09370080e-04, -4.28833919e-02, -1.72132262e-02], [ 8.35965353e-03, 4.55781405e-02, -2.42132324e-02]], [[ 4.88764274e-02, 6.16764673e-02, 1.71383871e-02], [ 3.83475664e-02, 6.11454731e-02, 1.19527231e-02]], [[-8.09687797e-02, 8.17902158e-03, -5.25417172e-03], [ 2.41407964e-02, 3.25160033e-03, -2.44207844e-02]]], [[[ 2.05738963e-02, -1.11911043e-02, -2.76806626e-02], [ 3.84035145e-02, 7.34061297e-02, 2.56814253e-02]], [[ 4.87554839e-02, 2.48335988e-02, -7.74723598e-03], [ 5.52062580e-02, 6.27619815e-02, 1.48180294e-03]], [[-8.57767638e-02, -5.80385978e-03, 3.99498228e-02], [ 1.82907424e-02, 5.53130978e-02, -4.63726851e-02]]]]]) - alpha_Australia(chain, draw, equations)float640.02263 0.02195 ... 0.0256 0.04313

array([[[0.02263033, 0.0219483 , 0.02278174], [0.02092655, 0.02117198, 0.01957458], [0.02471809, 0.02338723, 0.02126247], ..., [0.02733054, 0.02529274, 0.02926754], [0.0229278 , 0.02485852, 0.02744026], [0.02545857, 0.024065 , 0.02478534]], [[0.02535366, 0.02349025, 0.0258179 ], [0.02633209, 0.02612907, 0.02978084], [0.02298526, 0.02043026, 0.02110987], ..., [0.02911641, 0.01716411, 0.02245609], [0.02612856, 0.02059014, 0.02068046], [0.0288257 , 0.02033768, 0.02226378]], [[0.02258029, 0.02479371, 0.03093723], [0.0258095 , 0.02523357, 0.02888035], [0.02555444, 0.02835614, 0.02272297], ..., [0.02168698, 0.02232613, 0.02013229], [0.02085286, 0.02223427, 0.02259567], [0.02968412, 0.02811583, 0.03453368]], [[0.02283776, 0.02056642, 0.020418 ], [0.02121562, 0.02447455, 0.01693719], [0.01891216, 0.01366135, 0.01818819], ..., [0.03010412, 0.02400799, 0.03880659], [0.03082631, 0.02208602, 0.0357919 ], [0.02795351, 0.02560223, 0.04313025]]]) - lag_coefs_Canada(chain, draw, equations, lags, cross_vars)float640.07658 0.1244 ... 0.05222

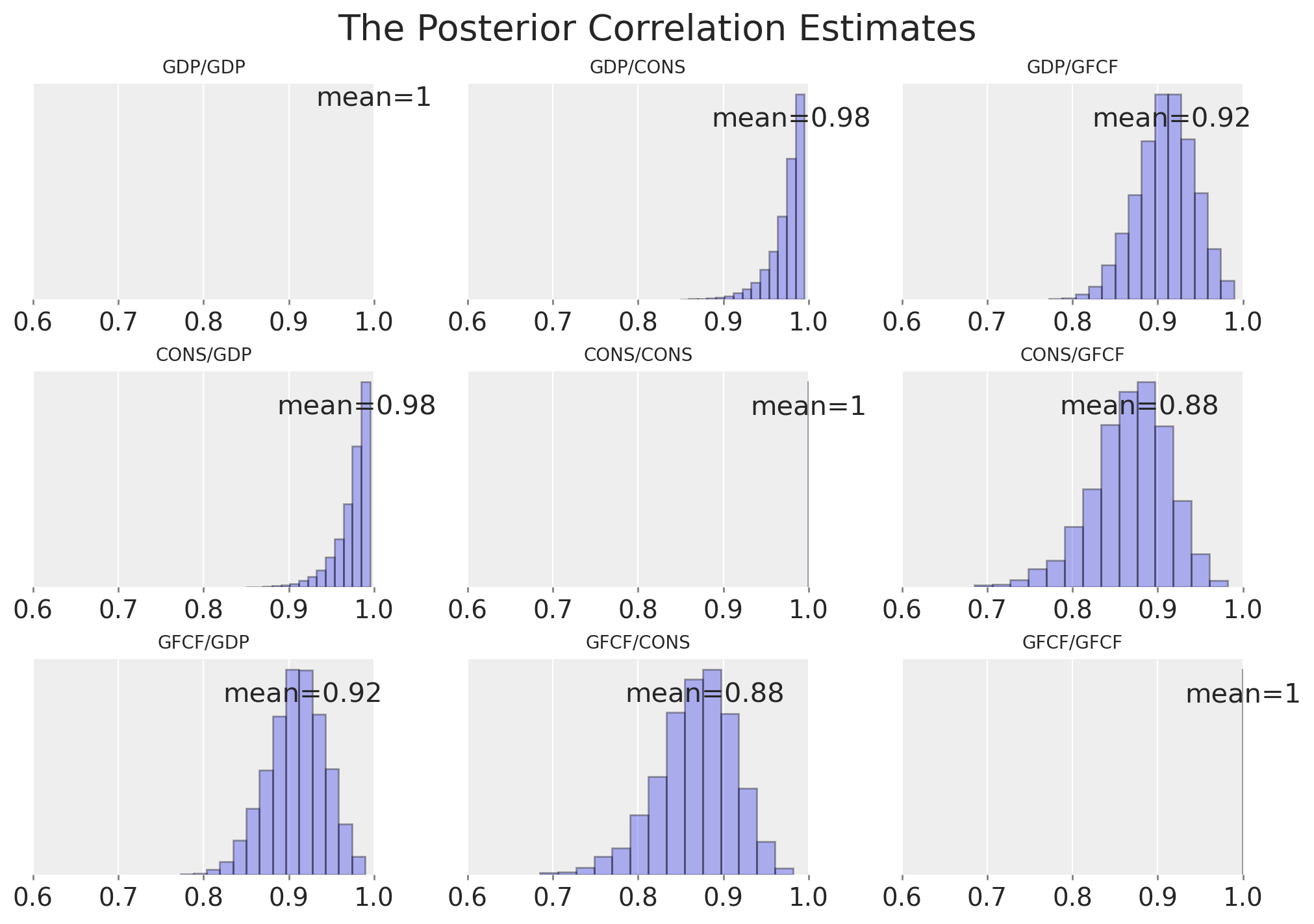

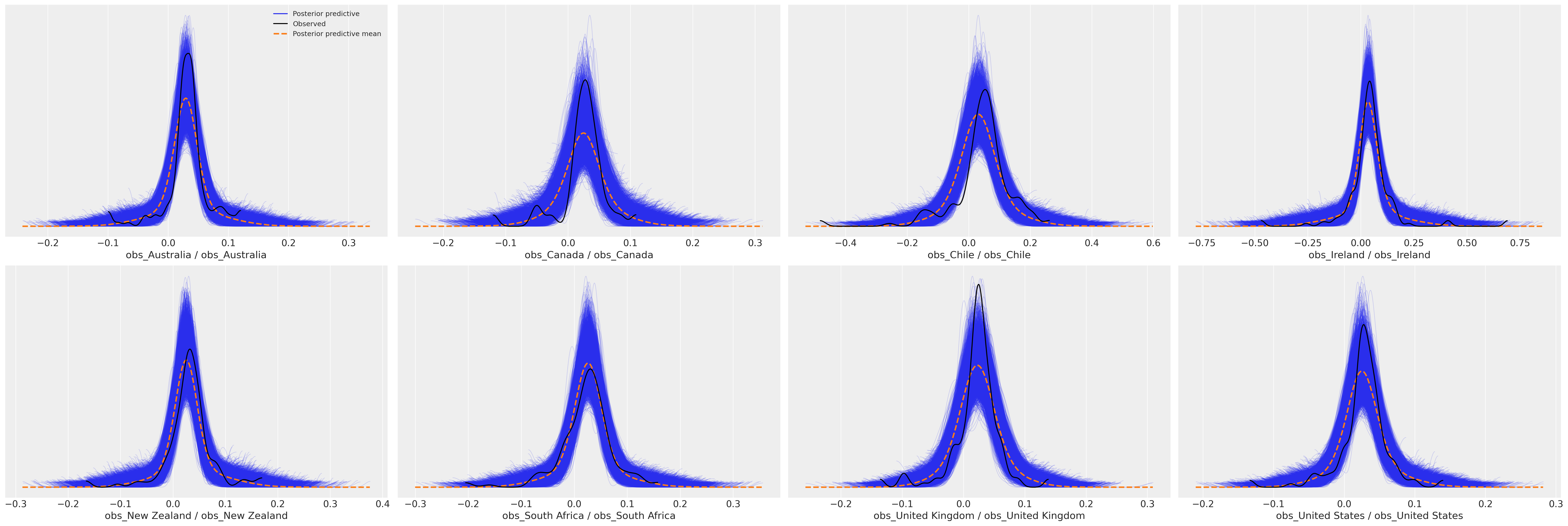

array([[[[[ 7.65771654e-02, 1.24359779e-01, 4.76910322e-02], [ 2.93667122e-02, 3.72310073e-02, 3.49173993e-02]], [[ 4.41347272e-02, 8.81811011e-03, -3.05038254e-04], [ 4.78927391e-02, -4.02214926e-03, 8.59285906e-03]], [[ 5.10979503e-02, 6.56671799e-02, -6.60947485e-02], [-6.07279826e-03, 4.58702563e-03, 7.23134454e-02]]], [[[ 9.58053837e-03, -3.61069775e-02, 6.42945320e-02], [ 3.85629973e-02, 3.81227043e-02, 3.71742576e-02]], [[ 1.99385509e-02, 6.05511924e-02, 4.11373442e-02], [ 4.40550718e-02, 8.55328657e-02, 5.53053572e-02]], [[ 1.73913842e-02, 3.34697407e-02, 1.16211321e-01], [ 6.83408791e-02, 4.04932208e-02, -4.08216894e-03]]], ... [[[ 1.60683243e-02, 2.61087715e-02, 3.92718077e-02], [ 4.01146237e-03, -2.37060312e-02, -4.62161483e-02]], [[ 1.56423894e-02, -4.50955622e-02, 3.51574636e-02], [ 5.25022469e-02, 1.23002896e-01, -8.16030734e-03]], [[ 7.57467809e-02, -2.31002172e-02, 5.49980542e-03], [ 9.01656608e-02, 3.48274765e-02, -2.31388941e-02]]], [[[-3.26630109e-02, 8.19079238e-02, 4.89127987e-02], [ 4.26865954e-02, 7.20299244e-03, 4.26620550e-04]], [[-2.17148943e-02, 1.58301049e-02, 1.81258805e-02], [-9.93058176e-03, 6.71043977e-02, -2.33663183e-02]], [[ 4.33928770e-02, 8.33936906e-02, -3.14980137e-02], [ 2.91290443e-02, -4.29921752e-03, 5.22202592e-02]]]]]) - alpha_Canada(chain, draw, equations)float640.01466 0.0211 ... 0.02472 0.01323